During the holiday shopping period, one of the nation's leading e-commerce brands finally launched its online AI chatbot solution.

Within minutes of launching, enthusiastic customers were asking questions; however, the bot was already struggling to answer simple questions, such as “Where is my order?” and “Do you ship internationally?”

The confused users abandoned their carts. New support tickets added up, and the brand took an immediate hit to its reputation—in less than an hour!

This scenario isn’t rare. By the year 2025, chatbots will give instant, accurate, and human-like responses that deliver on those expectations every time.

However, without a comprehensive chatbot testing checklist, even the best AI will fail, cost businesses sales, and ultimately destroy their customers' trust.

In this guide, we will summarize a streamlined, realistic, current chatbot testing checklist in order to bring your bot to meet today's capabilities.

What is a Chatbot?

A chatbot is an AI software application that allows human conversation to be simulated through text or speech.

Chatbots can be simple and rule-based bots that follow a script or sophisticated AI mobile or virtual assistants that can interpret context, have flow, and can provide personalization.

The year 2025 will see chatbots classified into three main bot types—rule-based bots (if-then logic), AI bots (machine learning), and LLM bots (using large language models like GPT-4 or Claude).

All require different chatbot testing methods and have different challenges.

What is Chatbot testing?

Chatbot testing is the assessment and validation process of a bot or virtual assistant's functionality, performance, and user experience, so they can be designed with user needs in mind and provide accurate responses.

The goal is to discover and rectify some key issues before the bot is deployed. Failure to thoroughly test the bot can hinder the user experience before quality improvements can be made.

By 2025, chatbot testing will have advanced from testing bots only by relevance to testing machine learning models, bias detection, and ethical AI compliance.

{{cta-image}}

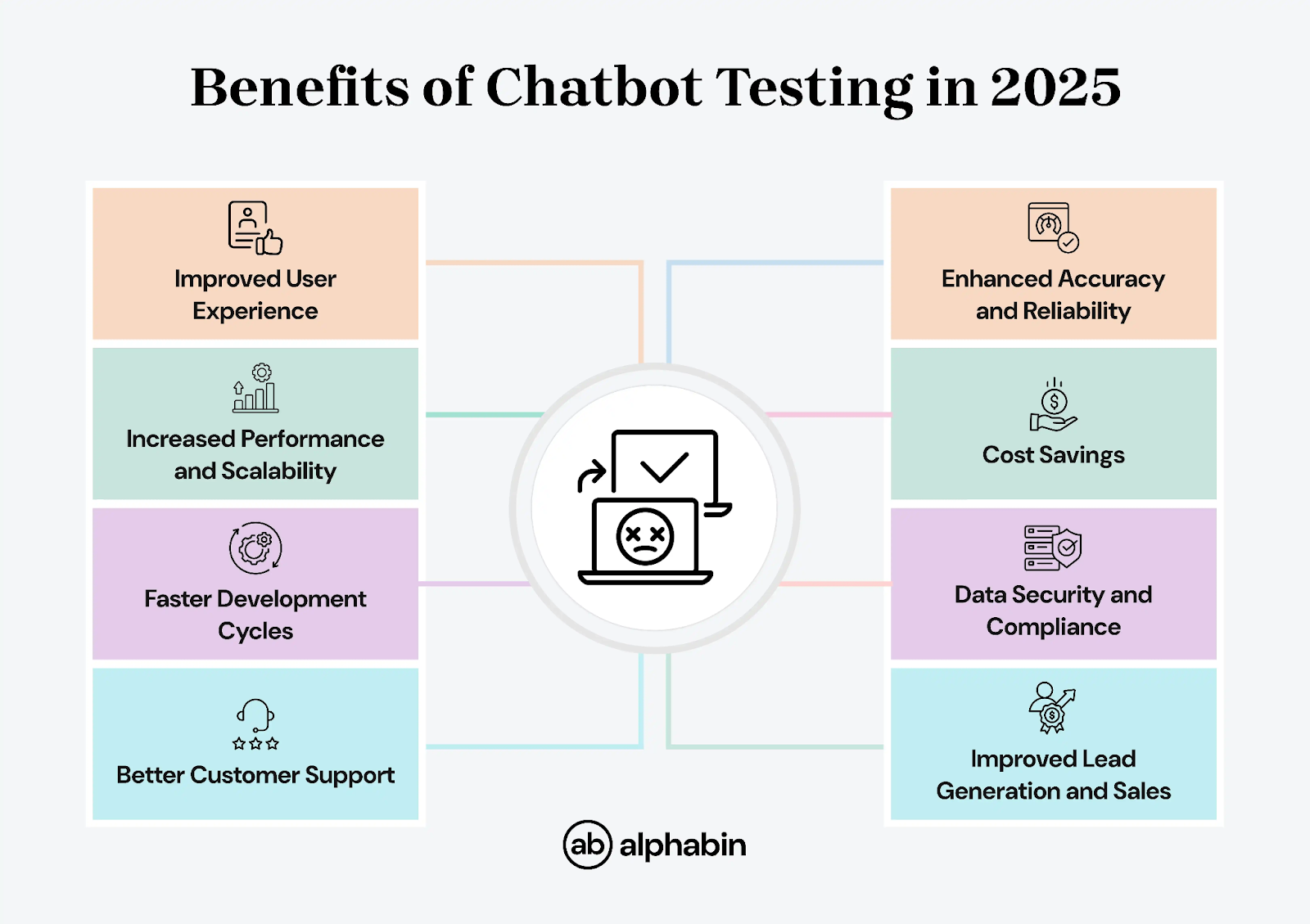

Why Chatbot Testing Is Critical in 2025?

Testing chatbots in 2025 is important because chatbots are stable AI tools that are being increasingly used across all sectors of the economy, and they are improving in complexity and integrating more deeply into workflows.

Chatbots will increasingly perform more complex tasks, be integrated into company workflows, and become a fundamental part of the overall customer experience.

A comprehensive chatbot QA checklist encompasses functionality but aligns with chatbot testing best practices in a high-demand season.

By following a detailed chatbot testing checklist, businesses can align AI capabilities with user expectations, especially in high-demand seasons.

{{blog-cta-1}}

Core Types of Chatbot Testing

Chatbot testing has a variety of specialized test approaches, which enable testing on different aspects of performance in a conversational AI interface.

By focusing on these aspects, businesses can optimize their chatbot’s functionality, as understanding the chatbot's functionality is essential for effective testing and response accuracy.

Elaboration on core testing types

Functional testing is the core of any chatbot functionality testing approach. This type of testing occurs when you test whether the bot is understanding the user's intents properly and responds accurately.

{{cta-image-second}}

This step should always be part of your chatbot testing checklist, as intent recognition accuracy directly affects response quality.

Usability testing considers the user experience aspects of the bot. Does the bot feel natural to interact with? Can the users achieve their goals without effort?

This type of testing looks at the design of the conversational flow, response timing, whether the bot sounds consistent in its personality, and overall user satisfaction.

Performance Testing will ensure that the bot will maintain an acceptable experience under real traffic loads.

Performance evaluation must be listed in your chatbot testing checklist to prevent crashes during high traffic periods.

Other important types of chatbot testing

1. NLP Testing

Testing natural language processing (NLP) confirms that the chatbot accurately understands the user inputs. Using an NLP chatbot testing framework can help evaluate intent recognition and language flexibility.

To build intent recognition and improve performance overall, adequate, high-quality, and representative training data is used.

Check for leaking data, unauthorized access, etc. Very important to ensure user data protection and capability for privacy compliance.

Additionally, test for malicious code to prevent code injection attacks and ensure the chatbot is protected against security threats.

Be certain that the chatbot works with connected systems, including CRMs, databases, payment gateways, etc. Prevent data flow failures and avoid creating errors.

4. A/B Testing

Test different chatbot versions or responses to see which one performs better. Data-driven approach to refine user experience and optimize chatbot.

5. Ad Hoc Testing

Spontaneous, unstructured testing to catch those unexpected issues or edge cases that formal test scripts might miss.

The 2025 Chatbot Testing Checklist

Before you get started, remember that testing your chatbot deployment on your site is important for checking user experience and integration.

Check through this chatbot testing checklist as you cover all the important areas of chatbot testing.

1. Define Purpose, KPIs, and Scope

Be clear about what your chatbot does: customer support, lead gen, order tracking? Set measurable goals that can include resolution rate, average handle time, and CSAT. So every test corresponds to your business goals.

Make sure your testing process is aligned with the chatbot's purpose to ensure it can deliver on its intended goals.

2. Validate Conversational Flow

Test the happy path and alternative paths users might take. Make sure conversations feel natural, have no dead ends, and users can go back or change topics.

3. Test Natural Language Understanding (NLU)

How well does your chatbot deal with variations of language, synonyms, text slang, typos, and sentence structure? Intent recognition is key to pulling accurate answers.

Your chatbot testing checklist should include tests for slang, misspellings, and multi-intent queries.

4. Check Context and Multi-Turn Conversations

Does the bot remember key details shared earlier in the conversation? For example, if a user gives their order number once, they shouldn’t have to give it again unless the context is intentionally reset.

5. Functional Dialogue Testing

Test all expected user queries and unusual or incomplete inputs. Prevents errors when users enter unexpected or out-of-flow data.

Create comprehensive chatbot test cases to validate expected and unexpected scenarios, including unusual phrasing and incomplete inputs

6. Review Response Accuracy and Hallucination Control

A modern chatbot testing checklist must include hallucination detection to avoid misinformation.

Test how the chatbot responds to unexpected or ambiguous user queries to ensure robust error handling and user satisfaction.

7. Check Personality, Tone, and Ethics

Consistent tone and brand alignment. Test for cultural sensitivity, remove bias in responses, and block offensive or harmful language.

8. Error Handling and Fallbacks

The bot responds helpfully when it doesn’t understand a question. Fallbacks guide the user to clarify their request or hand them over to a live agent.

9. Integration and API Testing

Test the connections that you have made to CRMs, payment systems, databases, or third-party APIs. Ensure that your transactions complete successfully, your data syncs, and that errors are handled properly.

Also, ensure that the chatbot has been implemented properly on the website and that the user experience will be seamless and smooth!

10. Security and Privacy Testing

Data that must be protected should be encrypted, access control should be in place, or proper data masking should be used. You should also check whether or not you are following GDPR or HIPAA.

11. Performance and Load Testing

Simulated stress testing (or extreme usage)- run your bot through the wringer and track how it manages in the sea of heavy traffic.

Track reactivity, response time, and stability with 3 to 4 different loads to ensure your service will not go offline.

12. Monitoring and Continuous Improvement

Intent success rate, escalation rate, and user satisfaction. Review conversations and A/B tests, and update flows and responses.

New Challenges with LLM-Based Chatbots

Large Language Model (LLM) chatbots operate in a completely different space compared to traditional rule-based chatbots.

While LLMs function as a chatbot, the AI models of LLMs create outputs automatically rather than following scripted conversation flows and are more non-deterministic or creative, calling for new types of evaluation methods and metrics.

Key Takeaway: Testing LLM-based chatbots follows systematic evaluation and ongoing improvement methods.

Though LLM-based counterparts exist, testing a context-aware bot has unique testing complexity and challenges that need to be addressed for better AI chatbot performance and to positively impact customer satisfaction.

{{cta-image-third}}

Traditional vs. LLM Chatbot Testing

Leveraging LLM Evaluation Frameworks

Testing LLM-powered chatbots requires more than functional checks. You also need to track hallucinations, bias, and factual accuracy.

An evaluation framework helps you measure and improve chatbot quality step by step:

- Set Goals & Metrics – Define what matters (accuracy, relevance, hallucination rate, tone, satisfaction). Use LLM scoring, but confirm with human review.

- Create a Test Dataset – Build a “golden” set of real and synthetic prompts, including slang, typos, and edge cases.

- Run Evaluations – Use tools like DeepEval, RAGAs, or Azure AI Studio to compare against expected outputs.

- Monitor Continuously – Automate your tests in your CI/CD pipeline and monitor performance in production. Regular audits control drift and bias.

{{blog-cta-2}}

Tools & Frameworks for Chatbot Testing

The chatbot testing space offers a wide range of tools, from affordable open-source options to mid-range solutions like Testim or Confident AI for automation and scalability, and enterprise-level platforms for complex AI evaluation.

The right choice depends on your chatbot type, compliance requirements, and integration needs, directly impacting testing effectiveness and ROI.

Popular Testing Tools by Budget & Platform

How to Choose the Right Tool for Your Use Case

For Startups and Small Projects: Start with open-source tools like Botium for basic functional testing and LangTest for bias detection. These are low-cost and get you started quickly.

For Growing Businesses: Invest in mid-range tools like Confident AI for LLM evaluation or Testim for automated testing. These products provide more scalability and more features as your chatbot gets more complex.

For Enterprise Deployments: Enterprise-level enterprise resources like Tricentis Tosca or DataRobot provide end-to-end testing suites with complete analytics, auditing, and reporting for compliance and integration.

Selection Criteria:

- Chatbot Type: Rule-based bots need different tools than LLM-powered systems

- Integration Requirements: Make sure it works with your dev stack

- Compliance Needs: Some industries require specific testing documentation

- Team Expertise: Consider your team’s technical skills and training needs

Conclusion

In 2025, following a comprehensive chatbot testing checklist will no longer be optional; it’s essential for trust, accuracy, and user satisfaction.

By following this chatbot QA checklist and applying chatbot testing best practices, you can ensure your bot delivers consistent, high-quality conversations.

With LLM-powered conversational AI the norm, effective testing means validating not just functionality and performance but trust, compliance, and user satisfaction.

A good testing strategy should cover technical accuracy, natural language understanding, security, and ethical safeguards.

This is exactly where Alphabin helps, With its AI first approach, Alphabin automates end-to-end chatbot testing, ensuring your bot is accurate, reliable, and ready to perform at scale.

FAQs

1. How often should I test my chatbot after launch?

Test frequently (at least every month) and after any significant updates. Frequent testing will help you catch bugs, performance regression, and new compliance risks.

2. How should I check for prompt injection attacks?

You can replicate malicious inputs that are meant to change the behavior of the chatbot. Use test cases associated with security and tools that identify unsafe responses.

3. How can I identify and correct hallucinations by a chatbot?

Run fact-check tests, pulling from verified data sources. Tag answers that have high confidence, but the facts are wrong. Then retrain the model or change the prompts.

4. How can I make sure my chatbot scales?

Run load tests where you mimic traffic spikes. Track the response times, error rates, and memory usage during peak periods to determine whether the model is performing stably under high load.

.svg)

.webp)