Imagine this: your chatbot responds to an angry customer with sarcasm, or your language model recommends a competitor's product.

These aren’t just minor errors; they can break customer trust, damage your brand, and cost you big.

That’s why prompt testing has become a must-have in modern AI development. It’s not just about making prompts sound good; it’s about making sure the responses are accurate, safe, ethical, and on brand.

With AI powering customer support, healthcare advice, financial recommendations, and more, untested prompts can quickly get out of hand.

In this guide, we’ll cover what prompt testing is, how it works, the best tools in 2025, and the proven practices you can follow to keep your AI systems reliable and trustworthy.

What is AI prompt testing?

AI prompt testing, also known as LLM prompt testing, is a structured process of assessing and validating prompts used with large language models (LLMs) to ensure they generate reliable, accurate, and consistent output.

Think of prompt testing as quality assurance for your AI conversations: you test different inputs, edge cases, and scenarios to ensure your prompts behave as expected in different contexts.

This form of testing consists of generating test cases, executing the prompts under different scenarios, measuring the quality of output, and making changes based on the results.

Just as software testing finds bugs before the code goes to production, prompt testing helps identify patterns of AI misbehavior before they reach your users.
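The loop described above (write test cases, run the prompts, measure the output) can be sketched in a few lines of Python. This is a minimal illustration, not a full framework: `PromptTestCase`, `run_suite`, and `fake_model` are hypothetical names, and `fake_model` is a canned stand-in for whatever LLM client you actually use.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class PromptTestCase:
    name: str
    prompt: str
    must_contain: List[str]      # substrings the reply has to include
    must_not_contain: List[str]  # substrings the reply must never include


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for a real LLM call; swap in your own client."""
    if "refund" in prompt.lower():
        return "I'm sorry for the trouble. You can request a refund within 30 days."
    return "Could you tell me a bit more about the issue?"


def run_suite(model: Callable[[str], str], cases: List[PromptTestCase]) -> Dict[str, List[str]]:
    """Run every case and return {case name: list of failure messages}."""
    results = {}
    for case in cases:
        reply = model(case.prompt).lower()
        failures = [f"missing: {s}" for s in case.must_contain if s.lower() not in reply]
        failures += [f"forbidden: {s}" for s in case.must_not_contain if s.lower() in reply]
        results[case.name] = failures
    return results


cases = [
    PromptTestCase("refund_request", "I want a refund now!", ["refund", "sorry"], ["calm down"]),
    PromptTestCase("vague_input", "It's broken.", ["more about"], ["refund"]),
]
report = run_suite(fake_model, cases)
for name, failures in report.items():
    print(name, "PASS" if not failures else failures)
```

Substring checks are deliberately crude. They are enough to catch obvious tone or safety slips, and you can replace them with stricter scoring as the suite grows.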

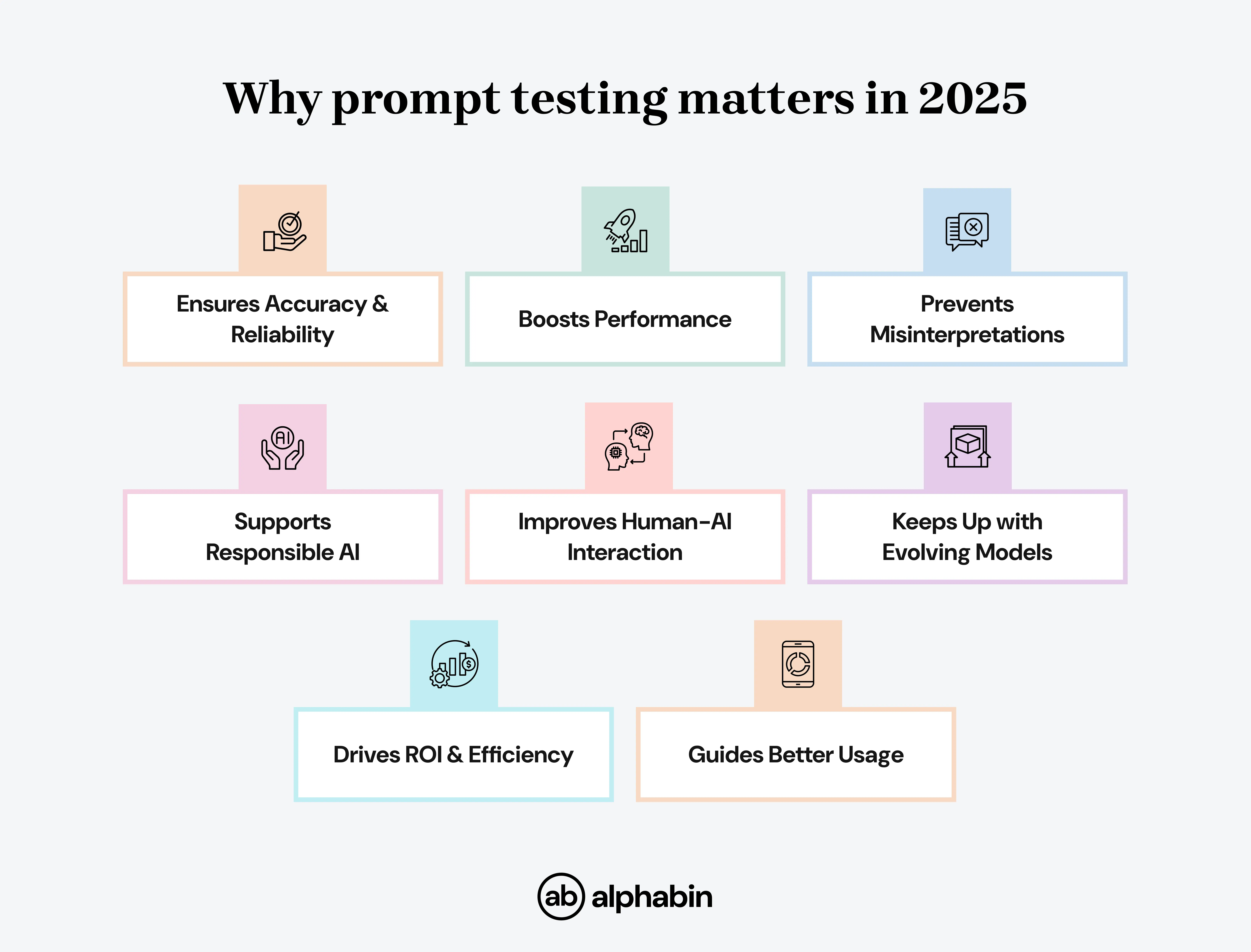

Why does it matter in 2025?

In 2025, AI prompt testing is important for the accuracy, reliability, and overall success of AI models across many use cases. With LLM prompt testing, enterprises can reduce the risk of biased or unsafe outputs.

As AI models become more complex, prompts are how we steer them toward the outcomes we want. Testing those prompts helps avoid incorrect or misleading outputs and supports the responsible use of AI.

{{blog-cta-1}}


Methods of prompt testing

Prompt testing methods involve evaluating the effectiveness of prompts in eliciting desired responses from AI models.

Adopting a prompt testing framework ensures systematic validation of outputs across different models.

Key techniques include:

1. Manual Testing

- Consistency Testing: Verify that the model produces stable output when the same prompt is run repeatedly.

- Edge Case Testing: Test with unusual or ambiguous prompts.

- Bias Detection: Find biased answers, especially on sensitive topics.

- Intent Preservation: Ensure that differently worded prompts with the same intent produce similar responses.
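Consistency testing is the easiest of these checks to turn into a number. The sketch below runs one prompt several times and reports how often the most common answer appears. `consistency_rate` and `flaky_model` are hypothetical names; the flaky model simply cycles through canned replies to imitate sampling noise.

```python
from collections import Counter
from itertools import cycle
from typing import Callable


def consistency_rate(model: Callable[[str], str], prompt: str, runs: int = 10) -> float:
    """Share of runs that return the most common answer (case and spacing ignored)."""
    answers = [" ".join(model(prompt).lower().split()) for _ in range(runs)]
    most_common_count = Counter(answers).most_common(1)[0][1]
    return most_common_count / runs


# Hypothetical flaky model: cycles through canned replies to mimic sampling noise.
_replies = cycle(["Paris", "paris ", "Paris", "Lyon"])


def flaky_model(prompt: str) -> str:
    return next(_replies)


rate = consistency_rate(flaky_model, "What is the capital of France?", runs=8)
print(f"consistency: {rate:.2f}")  # 6 of 8 runs agree, so this prints 0.75
```

Exact matching after normalization suits short factual answers. For free-form replies you would likely need a looser comparison, and the threshold you accept is a judgment call for your use case.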

2. Automated Prompt Testing

- A/B Testing: Compare variations of a prompt to see which is most effective.

- Test Case Generation: Use predefined prompts with expected outputs.

- Cross-Model Testing: Use the same prompts on different AI models and compare.

- Regression Testing: Confirm that prompt or model changes don’t break answers that were previously correct.

- Stress Testing: Test with heavy load or complex prompts.
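Regression testing in particular lends itself to automation. A minimal sketch, assuming you keep a dictionary of approved answers as the baseline: `find_regressions`, `baseline`, and `model_v2` are illustrative names, and `model_v2` is a stub standing in for an updated prompt or model.

```python
from typing import Callable, Dict, List


def find_regressions(model: Callable[[str], str], baseline: Dict[str, str]) -> List[str]:
    """Return the prompts whose current output differs from the approved baseline."""
    return [
        prompt
        for prompt, expected in baseline.items()
        if model(prompt).strip() != expected.strip()
    ]


# Approved outputs captured from a previous, reviewed run (illustrative data).
baseline = {
    "Translate 'hello' to Spanish.": "hola",
    "What is 2 + 2?": "4",
}


def model_v2(prompt: str) -> str:
    """Hypothetical updated prompt/model version with one changed behavior."""
    answers = {
        "Translate 'hello' to Spanish.": "hola",
        "What is 2 + 2?": "The answer is 4.",
    }
    return answers[prompt]


regressions = find_regressions(model_v2, baseline)
print(regressions)  # ["What is 2 + 2?"]
```

Note that exact matching flags harmless rewording as well as real breakage. That is useful as a review trigger, but a human or a looser comparison still has to decide whether the change is actually a problem.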

3. Advanced Techniques

- Chain-of-Thought Testing: Have the model break complex tasks into step-by-step reasoning, and check the steps as well as the final answer.

- Few-shot & Zero-shot Evaluation: Test with limited or no examples.

- Semantic Analysis: Test meaning, coherence, and quality, not just words.

- User Feedback: Gather input from real users to enhance outputs.

- Scenario Testing: Use realistic use cases to quantify reliability.
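As one example from this list, few-shot versus zero-shot evaluation comes down to running the same dataset through two prompt variants and comparing accuracy. The sketch below assumes a sentiment task; `build_prompt`, `accuracy`, and `toy_model` are hypothetical, and `toy_model` is rigged to answer tersely only when it has seen examples, so the scores illustrate the mechanics rather than real model behavior.

```python
from typing import Callable, List, Tuple

Example = Tuple[str, str]  # (review text, expected label)

SHOTS: List[Example] = [("I love it", "positive"), ("Terrible service", "negative")]


def build_prompt(text: str, shots: List[Example]) -> str:
    """Zero-shot when shots is empty; otherwise prepend labeled examples."""
    parts = ["Classify the sentiment as positive or negative."]
    for example, label in shots:
        parts.append(f"Review: {example}\nSentiment: {label}")
    parts.append(f"Review: {text}\nSentiment:")
    return "\n\n".join(parts)


def accuracy(model: Callable[[str], str], dataset: List[Example], shots: List[Example]) -> float:
    """Fraction of dataset items where the reply exactly equals the expected label."""
    hits = sum(
        model(build_prompt(text, shots)).strip().lower() == label
        for text, label in dataset
    )
    return hits / len(dataset)


def toy_model(prompt: str) -> str:
    """Hypothetical stand-in: replies with a bare label only if examples were given."""
    review = prompt.rsplit("Review:", 1)[1].lower()
    label = "negative" if "slow" in review or "broken" in review else "positive"
    has_examples = prompt.count("Sentiment:") > 1
    return label if has_examples else f"I think this is {label}."


dataset = [("Great app", "positive"), ("Checkout is broken", "negative"), ("Slow and buggy", "negative")]
zero_shot = accuracy(toy_model, dataset, shots=[])
few_shot = accuracy(toy_model, dataset, shots=SHOTS)
print(f"zero-shot: {zero_shot:.2f}, few-shot: {few_shot:.2f}")
```

With a real model, the gap between the two scores tells you whether the examples are earning their place in the prompt.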

4. Prompt Engineering Strategies

- Clear Instructions: Keep prompts precise and unambiguous.

- Few-Shot Examples: Show examples to guide output format.

- System Instructions: Define AI’s role and context.

- Iterative Refinement: Continuously improve prompts based on results.
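These strategies combine naturally in a small prompt builder. The sketch below assembles a system instruction, a few-shot example, and the user input into a list of role/content messages. That layout mirrors the chat format many LLM APIs accept, but it is an assumption here, so check your provider's documentation. `build_messages` and the `<input>` delimiter tags are illustrative choices.

```python
from typing import Dict, List, Tuple


def build_messages(
    role_description: str,
    task: str,
    examples: List[Tuple[str, str]],
    user_input: str,
) -> List[Dict[str, str]]:
    """Assemble system instructions, few-shot examples, and the delimited user input."""
    messages = [{"role": "system", "content": f"{role_description}\n\nTask: {task}"}]
    for example_input, example_output in examples:
        # Each example is shown as a user turn followed by the ideal assistant turn.
        messages.append({"role": "user", "content": f"<input>{example_input}</input>"})
        messages.append({"role": "assistant", "content": example_output})
    # Delimiters keep untrusted user text visibly separate from the instructions.
    messages.append({"role": "user", "content": f"<input>{user_input}</input>"})
    return messages


messages = build_messages(
    role_description="You are a support assistant for a mobile banking app.",
    task="Reply in one short, polite sentence.",
    examples=[("My card was declined.", "I'm sorry about that. Let's check your card status together.")],
    user_input="I can't log in.",
)
for message in messages:
    print(message["role"], "->", message["content"])
```

Keeping the builder in code means every refinement to the instructions or examples is a small, reviewable change that your tests can run against.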

{{cta-image}}

Best AI Prompt Testing Tools

There are plenty of popular and powerful prompt testing tools available for building and optimizing large language model (LLM) applications.

These tools streamline the prompt engineering workflow with features for testing, versioning, evaluation, and collaboration.

While tools help you experiment and optimize, many enterprises prefer working with specialized QA providers to handle prompt testing at scale. This is where companies like Alphabin stand out.

{{blog-cta-3}}

Prompt Testing Best Practices

In 2025, prompt testing is not a one-off project; it is an ongoing effort to ensure that the AI system remains precise, safe, and reliable. Effective prompt engineering testing ensures your instructions are precise and consistent.

Here are the top best practices:

- Clarity and Specificity – Prompts should be clear and specific. State the expected format, style, or length explicitly to eliminate vague responses.

- Provide Contextual Information – Provide background information, examples (few-shot), or assign roles/personas to help the AI produce accurate, useful results.

- Use Structured Formatting – Use delimiters, markdown, or specific breaks in prompts to separate the instructions from the examples and data.

- Iterative Refinement & Monitoring – Test and improve the prompts on an ongoing basis. Keep a record of outputs, analyse weaknesses, and revise based on performance or updates to the model.

- Versioning & Management – Treat each prompt like code. Keep prompts under version control, document every change as you revise a prompt, and allow rollbacks where needed for compliance and auditing.

- Security & Safety Checks – Employ input/output filters, red-team prompts, and adversarial testing, which can help mitigate any risks of prompt injection or producing harmful output.

- Evaluation & Validation – Measure responses against established criteria (accuracy, safety, bias) to ensure alignment with business or ethical goals.

- Human-in-the-Loop Oversight – Include human review in high-stakes or sensitive situations to provide quality control and safety.

- Adaptability & Learning – Stay aware of changing AI capabilities and prompt engineering strategies so that your prompts remain appropriate in the long run.
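To make the versioning practice concrete, here is a minimal in-memory sketch. In a real project you would more likely keep prompts in your existing version control system; `PromptVersion` and `PromptHistory` are hypothetical names used only to show the idea of recording each change with a note, a content hash, and a way to roll back.

```python
import hashlib
from dataclasses import dataclass, field
from typing import List


@dataclass
class PromptVersion:
    text: str
    note: str  # why the prompt changed, for audit purposes
    digest: str = field(init=False)

    def __post_init__(self) -> None:
        # A short content hash identifies exactly which prompt text was live.
        self.digest = hashlib.sha256(self.text.encode("utf-8")).hexdigest()[:12]


class PromptHistory:
    def __init__(self) -> None:
        self._versions: List[PromptVersion] = []

    def commit(self, text: str, note: str) -> PromptVersion:
        version = PromptVersion(text, note)
        self._versions.append(version)
        return version

    def current(self) -> PromptVersion:
        return self._versions[-1]

    def rollback(self) -> PromptVersion:
        """Discard the latest version and return the one before it."""
        if len(self._versions) < 2:
            raise ValueError("no earlier version to roll back to")
        self._versions.pop()
        return self.current()


history = PromptHistory()
v1 = history.commit("Answer politely.", "initial version")
v2 = history.commit("Answer politely and cite the help center.", "add citation rule")
restored = history.rollback()
print(restored.digest == v1.digest)  # True: the first version is live again
```

Logging the digest alongside each model response makes it possible to trace any output back to the exact prompt text that produced it.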

{{cta-image-second}}

Best prompts for software testing in 2025

Below are some example prompts for common QA scenarios:

1. Test Case Generation

Prompt: "Generate detailed test cases for the login feature of a mobile banking app. Include test steps, expected results, and edge cases such as invalid credentials, empty inputs, and multiple failed attempts."

Why use it: It quickly yields structured test cases that cover both normal and edge scenarios.

2. Unit Test Creation

Prompt: "Write unit tests in Python using PyTest for a function that calculates discounts based on product price, discount type (percentage or flat), and user membership level."

Why use it: It automates the tedious part of writing unit tests while following industry best practices.
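For a sense of what to expect back, here is a hand-written sketch of the kind of output this prompt might produce. Everything in it is an assumption made for illustration: the `calculate_discount` signature, the extra 5% for gold members, and the specific cases. The tests are plain functions with `assert` statements, which pytest can discover when they live in a file named like `test_*.py`.

```python
def calculate_discount(price: float, discount_type: str, value: float, membership: str = "basic") -> float:
    """Return the final price. The business rules here are illustrative assumptions."""
    if price < 0 or value < 0:
        raise ValueError("price and value must be non-negative")
    if discount_type == "percentage":
        discounted = price * (1 - value / 100)
    elif discount_type == "flat":
        discounted = price - value
    else:
        raise ValueError(f"unknown discount type: {discount_type}")
    if membership == "gold":
        discounted *= 0.95  # assumed extra 5% off for gold members
    # Never return a negative price; round to cents.
    return round(max(discounted, 0.0), 2)


def test_percentage_discount():
    assert calculate_discount(200.0, "percentage", 10) == 180.0


def test_flat_discount_never_goes_negative():
    assert calculate_discount(20.0, "flat", 50) == 0.0


def test_gold_member_gets_extra_discount():
    assert calculate_discount(100.0, "flat", 20, membership="gold") == 76.0


def test_unknown_discount_type_is_rejected():
    try:
        calculate_discount(100.0, "bogus", 10)
    except ValueError:
        return
    raise AssertionError("expected ValueError for an unknown discount type")
```

Generated tests still need review: check that the edge cases match your real business rules before trusting a green run.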

3. Regression Testing Scenarios

Prompt: "Suggest regression test cases to verify that recent UI updates in an e-commerce checkout page do not break existing payment, cart, and order history functionalities."

Why use it: It builds confidence that existing behavior stays intact when you deploy changes.

4. Security Testing Prompts

Prompt: "List possible security test cases for an API that handles payment transactions. Cover input validation, authentication, authorization, and SQL injection attempts."

Why use it: It helps identify vulnerabilities early by covering common attack vectors.

5. Performance & Load Testing

Prompt: "Design a performance test plan for a ride-hailing app backend to simulate 10,000 concurrent ride requests, including metrics to track and tools to use."

Why use it: It lets testers validate how the system performs under high load.

6. Bug Reproduction & Debugging

Prompt: "Given this bug report: ‘The checkout button sometimes fails on mobile Safari,’ suggest reproducible test steps, possible root causes, and debugging strategies."

Why use it: Helps reproduce flaky issues and narrow down probable causes.

7. Compliance Testing

Prompt: "Generate test cases to verify GDPR compliance for a healthcare web app. Include scenarios for consent management, data deletion requests, and sensitive data access."

Why use it: It helps systems stay compliant in highly regulated, sensitive domains and produces concrete evidence for audits.

{{cta-image-third}}

Conclusion

Prompt testing is a necessity. It is a fundamental element of building trustworthy AI systems.

When AI is integrated into customer experiences, the risks of poor or inconsistent prompts can escalate quickly.

The way forward is straightforward: use the right tooling, apply systematic practices, and scale your processes as your AI usage grows.

Great AI is not only about great models; it is also about ensuring that they perform consistently, safely, and predictably in the real world.

This guide works like a prompt testing tutorial, covering methods, tools, and prompt testing examples for 2025.

FAQs

1. How is prompt testing different from LLM evaluation?

Prompt testing checks specific prompts; LLM evaluation measures overall model performance.

2. How often should I run prompt tests?

Continuously during development, before deployments, and more often for critical apps.

3. Can prompt testing prevent all AI failures?

No. Prompt testing reduces risk, but it cannot eliminate every AI failure, so ongoing monitoring is still important.

4. How many test cases are enough?

Start with 50–100; scale to hundreds or thousands for complex, high-risk apps.
