A customer visits your site, ready to buy. They open your brand-new AI Chatbot expecting quick help, but instead they get stuck in a loop of confusing answers.

Frustration builds, the cart is abandoned, and your support team is left cleaning up angry tickets. This isn’t a design issue. It’s a conversation quality problem.

In 2025, conversational AI powers everything from customer service to healthcare to banking. One poorly tested Chatbot can cost businesses revenue, trust, and brand reputation.

That’s why AI Chatbot testing tools exist: to catch broken conversation paths, misleading responses, and usability gaps before your customers ever see them.

This guide is focused on practical evaluation: how to use AI Chatbot testing tools to improve conversation quality, what features matter, and how Alphabin’s EvalBot ensures your Chatbot delivers clear, engaging dialogues across every channel.

With the right testing approach, you protect your reputation, save resources, and give users the seamless AI experience they expect.

What is an AI Chatbot Testing Tool?

An AI Chatbot testing tool is software designed to evaluate and improve the performance of an AI-powered Chatbot before it reaches your end users.

The tool simulates real conversations, tests the paths through each conversation, and identifies glitches: everything you'd want to fix before release.

Chatbot testing differs from traditional software testing because it also covers natural language understanding, user intent detection, and how well the Chatbot keeps track of context during a conversation.

An AI Chatbot testing tool therefore verifies how your bot responds to different types of messages, whether it stays on topic, and whether it gives correct and consistent answers in various contexts.

These tools often evaluate the underlying AI models and large language models to ensure they generate accurate and contextually appropriate responses, which can significantly ease test maintenance.


How is AI transforming Chatbot testing in 2025?

AI is changing the field of Chatbot testing by significantly improving speed, efficiency, and coverage.

AI Chatbot testing tools automate repetitive tasks, check patterns, and analyze large volumes of data. They also support continuous improvement by uncovering potential issues before they reach end users.

Modern Chatbot testing frameworks use machine learning algorithms to predict user behavior patterns and surface edge cases that even the best human testers may overlook.

An AI agent can be used for end-to-end system testing, integrating and managing different components to improve error handling and context management during real-world interactions.


Here’s how AI is transforming Chatbot testing:

1. Enhanced Test Automation

AI handles repetitive test creation, execution, and script updates, which reduces manual effort and widens coverage of user scenarios.

2. Smarter Defect Detection

AI can use predictive analytics and anomaly detection to catch early signs of a defect, so it is fixed before it reaches production and becomes a costly error.

3. Improved Personalization

AI can generate varied test data sets, cover multiple languages, and validate that the Chatbot adapts to different user requirements.

4. Cost & Time Savings

AI testing is faster, uses resources more efficiently, and is more likely to catch defects early in the process, which lowers operational costs.

5. Ethical & Fair Testing

AI can help detect bias, protect personal data, and make the reasoning behind Chatbot responses more transparent.

Common Chatbot testing challenges

1. Unpredictable User Inputs: Users can phrase the same question in countless unexpected ways, so covering every variation in tests is difficult.

2. Context Management: Ensuring that a Chatbot remembers previous messages and keeps the conversation flowing logically.

3. Natural Language Understanding (NLU) Errors: Misunderstanding user intent can lead to an irrelevant response or, worse, an incorrect one. Chatbot responses should also read as accurate, coherent human language.

4. Data and Tone Across Languages: Validating accuracy and tone for every variation and in every supported language.

5. Integration Issues: Testing across all channels, APIs, and backend integrations to assess Chatbot performance.

6. Scalability Under Load: Ensuring the bot performs normally and doesn’t lag while processing high volumes of conversations.

7. Edge Cases & Unusual Scenarios: Testing odd or unusual conversation routes that could disrupt the conversational flow.

8. Bias and Fairness: Identifying and eliminating biased or inappropriate responses.


Key Features of an AI Chatbot Testing Tool

When you choose AI testing software for Chatbot verification, several essential features separate professional solutions from basic testing software.

The tool should provide insights into the Chatbot's performance using standardized testing and benchmarking.

- Natural Language Understanding Testing: The Chatbot testing tool should validate how effectively your Chatbot understands different phrasings, synonyms, and contextual clues. Ideally, it can automatically generate test variations of user input.

- Conversation Flow Testing: More advanced Chatbot testing frameworks should map and validate entire conversation trees, confirm logical flow, and check that a sensible fallback exists when a conversation goes off-script.

- Performance Metrics and Analytics: Reporting should track response time, accuracy, user satisfaction, and conversions, the critical metrics that give you actionable insights for continual improvement.

- Integration Capabilities: The best Chatbot performance testing software fits naturally into your development and deployment pipelines, so testing runs as part of your CI/CD process.

- Support for Multiple Languages: As businesses expand into more countries, the testing software should validate Chatbot performance in each target language and cultural context.

Best AI Chatbot Testing Tools in 2025

1. EvalBot by Alphabin

Alphabin uses EvalBot as the measurable layer in delivery. It is an AI Chatbot testing tool you can run offline or in restricted networks. It combines fast metrics with an AI explanation so product teams know what to fix and why.

How it works

- Provide a user prompt, the Chatbot answer, and a reference answer.

- The metric engine scores similarity, accuracy, completeness, relevance, and readability.

- The AI judge writes a short rationale that highlights missing concepts or format mismatches.

- Scores combine with default weights: 35 percent similarity, 25 percent accuracy, 25 percent completeness, 10 percent relevance, and 5 percent readability.

- You get a weighted final grade and a clear breakdown per intent.
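The weighting step above can be sketched in a few lines. This is only an illustration of the arithmetic, not EvalBot's actual code: the function name `weighted_grade`, the 0 to 1 score scale, and the example scores are all assumptions; only the five weights come from the list above.

```python
# Default weights as stated in the list above (they sum to 1.0).
DEFAULT_WEIGHTS = {
    "similarity": 0.35,
    "accuracy": 0.25,
    "completeness": 0.25,
    "relevance": 0.10,
    "readability": 0.05,
}


def weighted_grade(scores, weights=DEFAULT_WEIGHTS):
    """Combine per-metric scores (assumed to be in [0, 1]) into one grade."""
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing metric scores: {sorted(missing)}")
    total_weight = sum(weights.values())
    # Dividing by the total keeps the grade in [0, 1] if weights are changed.
    return sum(weights[name] * scores[name] for name in weights) / total_weight


# Hypothetical metric scores for one answer, for illustration only.
example_scores = {
    "similarity": 0.80,
    "accuracy": 0.90,
    "completeness": 0.60,
    "relevance": 1.00,
    "readability": 0.70,
}
grade = weighted_grade(example_scores)
print(f"final grade: {grade:.2f}")  # prints "final grade: 0.79"
```

Because similarity carries the largest weight, a low similarity score pulls the final grade down more than a low readability score does.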

Why teams pick it

- Balanced and explainable. Numbers plus a short narrative.

- Fast and lightweight. No heavy infrastructure.

- Robust to paraphrases and typos with lexical and semantic checks.

This AI Chatbot testing tool is built for teams who need explainable metrics without heavy infrastructure.


2. Botium

A mature suite for conversation flow testing. You write flows in a readable script, connect to many channels, and run tests locally or in CI. Good for Chatbot performance testing across platforms.

How it works

- Connect a channel or NLP provider using a built-in connector.

- Write flows in BotiumScript or import transcripts.

- Add assertions for expected replies, entities, or confidence.

- Run locally with Botium CLI or in Botium Box, then export JUnit for CI.

- Review the run summary, drill into failed steps, fix, and rerun.
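To make the idea of a scripted flow with assertions concrete, here is a plain-Python analogue. It does not use BotiumScript or any Botium API: `toy_bot`, `ORDER_STATUS_FLOW`, and `run_flow` are hypothetical names, and the bot is a stand-in for a real channel connector.

```python
def toy_bot(message, state):
    """Hypothetical stand-in for a real channel connector."""
    text = message.lower()
    if "order" in text and "status" in text:
        state["awaiting_order_id"] = True
        return "Sure, what is your order number?"
    if state.get("awaiting_order_id") and text.strip().isdigit():
        state["awaiting_order_id"] = False
        return f"Order {text.strip()} has shipped."
    return "Sorry, I did not understand that."


# Each step pairs a user message with text the reply must contain.
ORDER_STATUS_FLOW = [
    ("What is my order status?", "order number"),
    ("12345", "has shipped"),
]


def run_flow(bot, flow):
    """Replay a flow and return a list of (step, user, reply) failures."""
    state, failures = {}, []
    for index, (user, expected) in enumerate(flow, start=1):
        reply = bot(user, state)
        if expected.lower() not in reply.lower():
            failures.append((index, user, reply))
    return failures


failures = run_flow(toy_bot, ORDER_STATUS_FLOW)
print("PASS" if not failures else f"FAIL: {failures}")
```

The second step only passes because the bot remembers that it asked for an order number, so the same pattern doubles as a simple context check.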

Where it fits

- Multichannel releases where you need pass or fail signals per channel.

- NLP analytics to spot confusing intents across engines.

- Teams that want no-code options for non-engineers.

Botium complements an AI Chatbot testing tool like EvalBot by covering flow logic as you scale to multiple channels.

3. TestMyBot

Open-source conversation replay that runs in CI. You record scenarios or write them, commit them beside your code, and replay on every merge. Good for Chatbot regression testing with minimal overhead.

How it works

- Add TestMyBot to your repo.

- Record or author scenarios in YAML or JSON.

- Run in CI on every commit and publish JUnit XML.

- Triage failures directly in CI logs and link back to the scenario file.

- Keep a small golden set for critical intents, then expand weekly.
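The replay-and-publish loop can be approximated with the standard library alone. The sketch below is not TestMyBot's API: the scenario format, `toy_bot`, and `replay_to_junit` are assumptions made for illustration, and the XML is a minimal JUnit-style report rather than a full schema.

```python
import xml.etree.ElementTree as ET

# Hypothetical golden set; a real project would load these from files.
SCENARIOS = [
    {"name": "greeting", "user": "hello", "expect": "Hi"},
    {"name": "refund_policy", "user": "refund policy", "expect": "30 days"},
]


def toy_bot(message):
    """Hypothetical bot that only knows how to greet."""
    replies = {"hello": "Hi! How can I help?"}
    return replies.get(message, "Sorry, I did not understand that.")


def replay_to_junit(bot, scenarios, suite_name="chatbot-regression"):
    """Replay scenarios and return (junit_xml_string, failure_count)."""
    suite = ET.Element("testsuite", name=suite_name, tests=str(len(scenarios)))
    failed = 0
    for scenario in scenarios:
        case = ET.SubElement(suite, "testcase", name=scenario["name"])
        reply = bot(scenario["user"])
        if scenario["expect"] not in reply:
            failed += 1
            failure = ET.SubElement(case, "failure", message="unexpected reply")
            failure.text = f"expected {scenario['expect']!r} in {reply!r}"
    suite.set("failures", str(failed))
    return ET.tostring(suite, encoding="unicode"), failed


report_xml, failed = replay_to_junit(toy_bot, SCENARIOS)
print(report_xml)
```

Writing `report_xml` to a file lets a CI server that understands JUnit-style reports show each scenario as its own test case.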

Where it fits

- Pipelines that already publish JUnit-style results.

- Teams that want fast feedback in pull requests.

- Cases where you want to replay real transcripts as tests.

While not a full AI Chatbot testing tool, it works well alongside EvalBot for regression checks.

4. Rasa Testing Suite

First-class tests for Rasa assistants. You can validate NLU and full multi-turn flows with a CLI. This is the fastest path if you run Rasa today and want a pattern that scales.

How it works

- Add NLU evaluation data and story tests to your project.

- Run rasa test nlu for intent and entity accuracy and rasa test e2e for conversation flows.

- Inspect the reports: confusion matrix, failed stories, coverage.

- Set thresholds and fail the pipeline when accuracy or flow success drops.

- Fix intents or stories, rerun, commit the improved baseline.
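The threshold step can be as small as the sketch below. It deliberately does not read Rasa's report files: the metric names, the numbers, and the `check_thresholds` helper are all hypothetical, and you would fill `metrics` from whatever reports your test run produces.

```python
def check_thresholds(metrics, thresholds):
    """Return a list of violations; an empty list means the gate passes."""
    violations = []
    for name, minimum in thresholds.items():
        value = metrics.get(name)
        if value is None:
            violations.append(f"{name}: metric missing from report")
        elif value < minimum:
            violations.append(f"{name}: {value:.3f} is below {minimum:.3f}")
    return violations


# Hypothetical numbers, for illustration only.
metrics = {"intent_accuracy": 0.93, "flow_success_rate": 0.88}
thresholds = {"intent_accuracy": 0.90, "flow_success_rate": 0.95}

violations = check_thresholds(metrics, thresholds)
for line in violations:
    print("THRESHOLD FAILED:", line)

# In a CI job, pass this value to sys.exit() so a violation fails the build.
exit_code = 1 if violations else 0
```

Treating a missing metric as a violation is a design choice: it stops a renamed or absent report from silently passing the gate.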

Where it fits

- Teams on Rasa that want end-to-end coverage in CI.

- Anyone looking for a reference design for Chatbot testing frameworks.

If you already use Rasa, this suite acts as your built-in AI Chatbot Testing Tool for NLU and flows.

5. LangTest

An open-source library that stress-tests your language layer. It checks robustness, fairness, and bias across many perturbations, which is essential for assistants that serve a broad audience.

Unlike a traditional AI Chatbot testing tool, LangTest focuses purely on robustness and fairness.

How it works

- Point LangTest at your model and dataset.

- Choose suites: typos, casing, paraphrase, toxicity, representation.

- Run the pack and capture accuracy deltas by perturbation.

- Review fairness and representation summaries, then create fixes.

- Track robustness trends over time and keep a quarterly target.
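"Accuracy deltas by perturbation" means measuring how far accuracy moves when the same inputs are lightly distorted. The sketch below shows that idea without using the LangTest library: the toy classifier, the two perturbations, and the four-sample dataset are all hypothetical.

```python
def swap_last_two(text):
    """Typo perturbation: swap the last two letters of words longer than 5."""
    words = []
    for word in text.split():
        if len(word) > 5:
            word = word[:-2] + word[-1] + word[-2]
        words.append(word)
    return " ".join(words)


PERTURBATIONS = {"uppercase": str.upper, "typo": swap_last_two}


def toy_intent_model(text):
    """Hypothetical case-sensitive keyword classifier."""
    if "refund" in text:
        return "refund"
    if "order" in text:
        return "order_status"
    return "fallback"


DATASET = [
    ("i want a refund", "refund"),
    ("where is my order", "order_status"),
    ("refund my last order please", "refund"),
    ("track order 55", "order_status"),
]


def accuracy(model, samples):
    """Fraction of samples where the predicted intent matches the label."""
    return sum(model(text) == label for text, label in samples) / len(samples)


def accuracy_deltas(model, samples, perturbations):
    """Return baseline accuracy and the change under each perturbation."""
    baseline = accuracy(model, samples)
    deltas = {}
    for name, perturb in perturbations.items():
        perturbed = [(perturb(text), label) for text, label in samples]
        deltas[name] = accuracy(model, perturbed) - baseline
    return baseline, deltas


baseline, deltas = accuracy_deltas(toy_intent_model, DATASET, PERTURBATIONS)
print(f"baseline accuracy: {baseline:.2f}")
for name, delta in deltas.items():
    print(f"{name}: {delta:+.2f}")
```

A large negative delta for one perturbation points at the specific weakness to fix, here the classifier's case sensitivity.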

Where it fits

- Pre-launch hardening for NLU and generation models.

- Ongoing audits for compliance and public trust.

- Complement to flow tests and CI replay.

LangTest is not a standalone AI Chatbot testing tool, but strengthens robustness for any assistant pipeline.

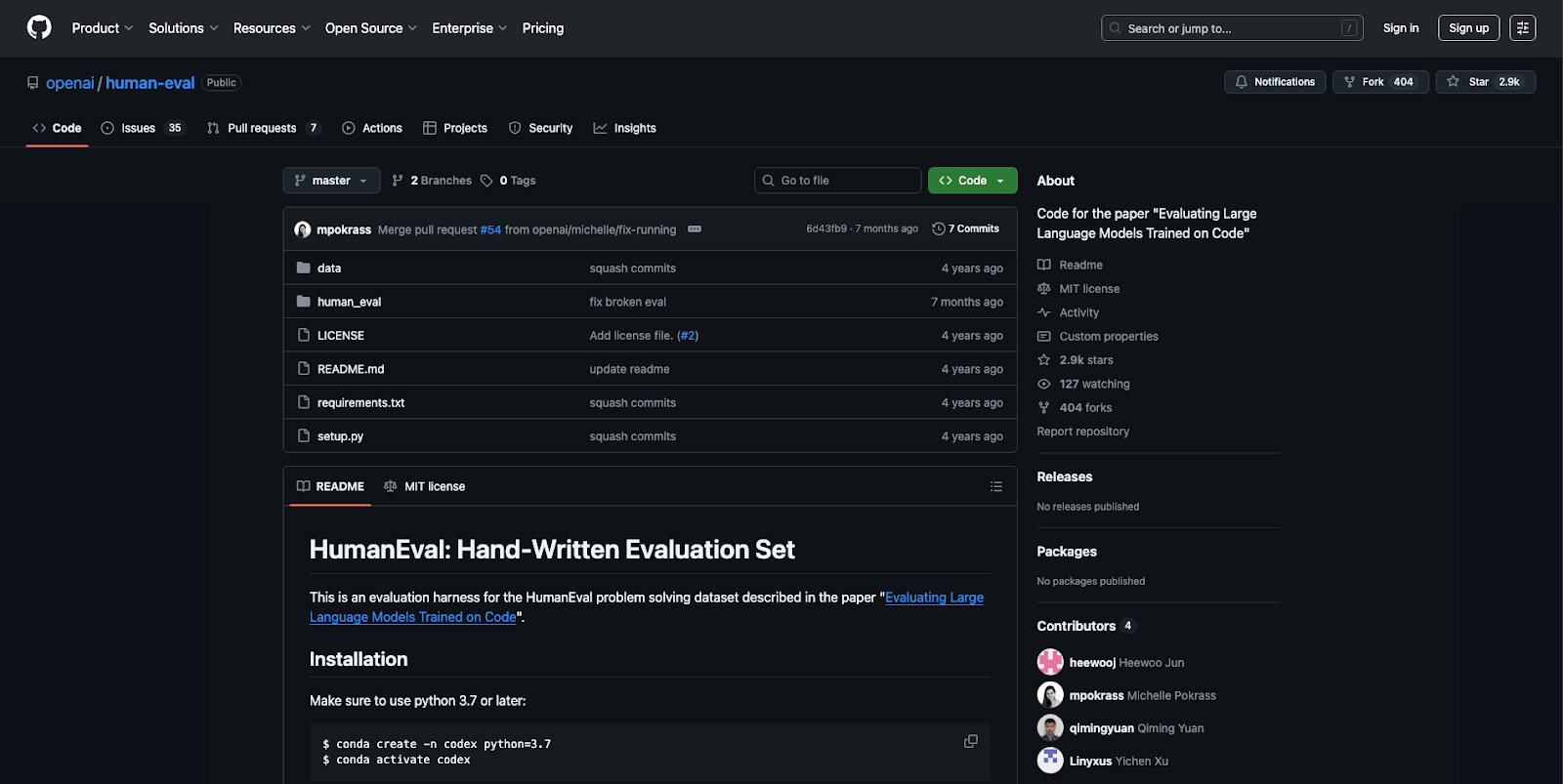

6. HumanEval

A human review loop. Evaluators rate tone, empathy, clarity, and recovery behavior on real transcripts. Useful when brand voice is as important as task success.

While not an automated AI Chatbot testing tool, HumanEval ensures human judgment on empathy and clarity.

How it works

- Define a simple rubric: tone, empathy, clarity, and recovery, each on a one-to-five scale.

- Sample conversations across intents and languages.

- Reviewers score and add short notes on misses and good recoveries.

- Aggregate scores, flag outliers, and draft changes to prompts or flows.

- Re-test and confirm gains with automated tools and EvalBot scoring.
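Aggregating rubric scores and flagging outliers needs very little code. The sketch below assumes one review per conversation and a cutoff of 3.0 on the one-to-five scale; the review data, the `floor` value, and the function names are hypothetical.

```python
from statistics import mean

RUBRIC = ("tone", "empathy", "clarity", "recovery")

# Hypothetical reviewer scores on a one-to-five scale.
REVIEWS = [
    {"conversation": "c1", "tone": 5, "empathy": 4, "clarity": 5, "recovery": 4},
    {"conversation": "c2", "tone": 4, "empathy": 4, "clarity": 4, "recovery": 5},
    {"conversation": "c3", "tone": 2, "empathy": 1, "clarity": 3, "recovery": 2},
]


def aggregate(reviews, rubric=RUBRIC):
    """Average each rubric dimension across all reviewed conversations."""
    return {dim: mean(review[dim] for review in reviews) for dim in rubric}


def flag_outliers(reviews, rubric=RUBRIC, floor=3.0):
    """Return (conversation, overall score) pairs that fall below the floor."""
    flagged = []
    for review in reviews:
        overall = mean(review[dim] for dim in rubric)
        if overall < floor:
            flagged.append((review["conversation"], overall))
    return flagged


print(aggregate(REVIEWS))
print(flag_outliers(REVIEWS))
```

The flagged conversations are the ones worth reading in full before drafting changes to prompts or flows.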

Where it fits

- Contact center use cases.

- Highly regulated domains.

- Markets where apologies and escalation quality matter.

It ensures human judgment complements automated AI Chatbot testing tools in brand-sensitive cases.


Comparison table

How Alphabin helps you

Most teams want results, not another dashboard. Alphabin engages as a delivery partner. We use EvalBot as the AI Chatbot testing tool that turns answers into an explainable score.

We pair it with the flow and language tools you already use. We help you define thresholds and ship a one-page report that product, support, and leadership can read in under two minutes.

You keep control of your stack. We help you reach a stable baseline fast.


Conclusion

With AI Chatbot testing tools advancing rapidly in 2025, businesses can deploy conversational AI with speed, accuracy, and confidence.

Botium gives you multichannel flow checks. TestMyBot brings open-source replay into CI. Rasa tests validate multi-turn paths and NLU. LangTest hardens the language layer for robustness and fairness. HumanEval adds human judgment where tone matters.

Alphabin brings it together with EvalBot, the AI Chatbot testing tool that produces a single, explainable score that everyone can trust. Start with your top intents. Wire EvalBot next to your flow tests. Publish the score in every release. Teams move faster when the signal is clear.

With Alphabin, you can scale seamless, intelligent conversations that serve customers better and increase business potential.

The future is for those who test smarter, not just harder!

FAQs

1. What is an AI Chatbot testing tool?

It checks multi-turn conversations, intent recognition, and flow logic. Unlike UI automation, it directly measures dialogue quality with clear pass/fail signals.

2. How is EvalBot by Alphabin different from other Chatbot testing tools?

EvalBot combines NLP metrics with an AI judge to give a weighted score plus plain-language explanations. It’s fast, offline-ready, and easy for both engineers and non-engineers to use.

3. Can Chatbot testing tools be used with CI/CD pipelines?

Yes, tools like TestMyBot, Rasa, and Botium integrate smoothly into CI/CD pipelines. EvalBot also works in restricted environments for consistent quality checks.

4. Do I need multiple Chatbot testing tools, or is one enough?

It depends. EvalBot provides explainable scores, while other tools cover flows, robustness, and human tone checks. Many teams combine them, with EvalBot as the central measurable layer.
