Imagine launching a new AI Chatbot for your company. Soon after, it starts giving customers inaccurate information about your return policy.

Within hours, complaints arrive from annoyed customers, and your support team is scrambling to contain the chaos the Chatbot caused.

This happens far more often than you might think, simply because many businesses skip testing on a proper Chatbot evaluation platform before deploying their bot.

And the pressure is only growing. The global market for Chatbots could reach about USD 9.56 billion in 2025 (up from USD 7.76 billion in 2024), and is projected to exceed USD 27 billion by 2030, with a compound annual growth rate (CAGR) of 23.3%.
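As a quick sanity check on the figures above, the sketch below compounds the 2025 estimate at the quoted CAGR and derives the growth implied by the 2024 and 2025 numbers. The function names are illustrative, and the only inputs are the values quoted in the paragraph.

```python
# Figures quoted in the paragraph above (USD billions).
MARKET_2024 = 7.76
MARKET_2025 = 9.56
CAGR = 0.233  # 23.3% compound annual growth rate


def project(value: float, rate: float, years: int) -> float:
    """Compound `value` forward at `rate` for `years` years."""
    return value * (1 + rate) ** years


def implied_growth(start: float, end: float, years: int = 1) -> float:
    """Annualized growth rate implied by two data points."""
    return (end / start) ** (1 / years) - 1


projection_2030 = project(MARKET_2025, CAGR, years=2030 - 2025)
growth_2024_to_2025 = implied_growth(MARKET_2024, MARKET_2025)

print(f"Projected 2030 market: USD {projection_2030:.1f} billion")
print(f"Implied 2024-2025 growth: {growth_2024_to_2025:.1%}")
```

Compounding USD 9.56 billion at 23.3% for five years gives roughly USD 27.2 billion, which is consistent with the "exceed USD 27 billion by 2030" projection.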

Chatbots handle conversational contexts that can be far more complex than the inputs traditional software deals with.

Testing methods for Chatbots are therefore more specialized than those for conventional software, because we need to ensure that the bot's responses are contextually relevant and correct.

Introduction to Chatbot Evaluation Platforms

Chatbot testing platforms are tools to test, measure, and improve how well a Chatbot performs before and after it goes live.

They help businesses determine if their Chatbot can understand questions, provide accurate answers, cater to different user types, and integrate with other systems.

These Chatbot evaluation platforms can test for accuracy, speed, usability, and overall conversation quality.

By relying on Chatbot testing platforms instead of guesswork, businesses can avoid costly mistakes, increase customer satisfaction, and see whether their investment pays off.

Why evaluating Chatbots matters in 2025

In 2025, evaluating Chatbots matters because they are now a common part of customer service, business workflows, and even personal productivity tools.

With 95% of customer interactions expected to be handled by AI (voice and text), enterprises need effective evaluation to weigh costs against benefits, avoid damaging the customer experience, protect their efficiency gains, and justify their investment.

Here’s what you need to know about why evaluating Chatbots matters:

1. Delivering Reliable Customer Service

Evaluation makes sure Chatbots provide quick, accurate, and personalized responses, keeping customer satisfaction high and errors to a minimum, a priority for all Chatbot evaluation platforms.

{{cta-image}}

2. Ensuring Consistency Across Platforms

Regular evaluation on robust Chatbot testing platforms confirms that Chatbots deliver the same quality across websites, applications, and social platforms.

3. Boosting Business Efficiency & ROI

Measuring response times, resolved tickets, and automation rates shows how much cost Chatbots actually save, quantified through Chatbot performance metrics.

4. Testing Scalability & System Integration

Testing confirms that a Chatbot can support more users without slowing down and that it integrates well with your company's systems.

5. Staying Ahead with AI Advancements

Ongoing evaluation allows your business to adopt the latest in natural language processing (NLP), sentiment analysis, and LLM evaluation frameworks within your chosen Chatbot evaluation platforms.

6. Future-Proofing Customer Engagement

Ongoing assessment, backed by automated Chatbot evaluation practices, keeps your Chatbots effective and relevant over time.
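To make the efficiency metrics from point 3 concrete, here is a minimal sketch that derives response time, resolution rate, automation rate, and fallback rate from conversation logs. The `Conversation` schema and the sample values are hypothetical placeholders, not data from any real platform.

```python
from __future__ import annotations

from dataclasses import dataclass
from statistics import mean


@dataclass
class Conversation:
    """One logged Chatbot session (hypothetical schema for illustration)."""
    response_times_s: list[float]  # latency of each bot reply, in seconds
    resolved: bool                 # was the user's issue resolved?
    escalated_to_human: bool       # did a human agent have to step in?
    fallback_count: int            # number of "I didn't understand" replies


def summarize(conversations: list[Conversation]) -> dict[str, float]:
    """Aggregate basic performance metrics over a batch of sessions."""
    latencies = [t for c in conversations for t in c.response_times_s]
    if not conversations or not latencies:
        raise ValueError("need at least one conversation with one bot reply")
    total = len(conversations)
    return {
        "avg_response_time_s": mean(latencies),
        "resolution_rate": sum(c.resolved for c in conversations) / total,
        "automation_rate": sum(not c.escalated_to_human for c in conversations) / total,
        "fallback_rate": sum(c.fallback_count for c in conversations) / len(latencies),
    }


# Made-up sample log, used only to exercise the function.
SAMPLE_LOG = [
    Conversation([0.8, 1.2], resolved=True, escalated_to_human=False, fallback_count=0),
    Conversation([2.0], resolved=False, escalated_to_human=True, fallback_count=1),
    Conversation([1.0, 1.0, 1.0], resolved=True, escalated_to_human=False, fallback_count=1),
    Conversation([0.5, 1.5], resolved=True, escalated_to_human=False, fallback_count=0),
]

for name, value in summarize(SAMPLE_LOG).items():
    print(f"{name}: {value:.3f}")
```

Tracking these numbers per release makes it easier to see whether a change to the bot improved or degraded the customer experience.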

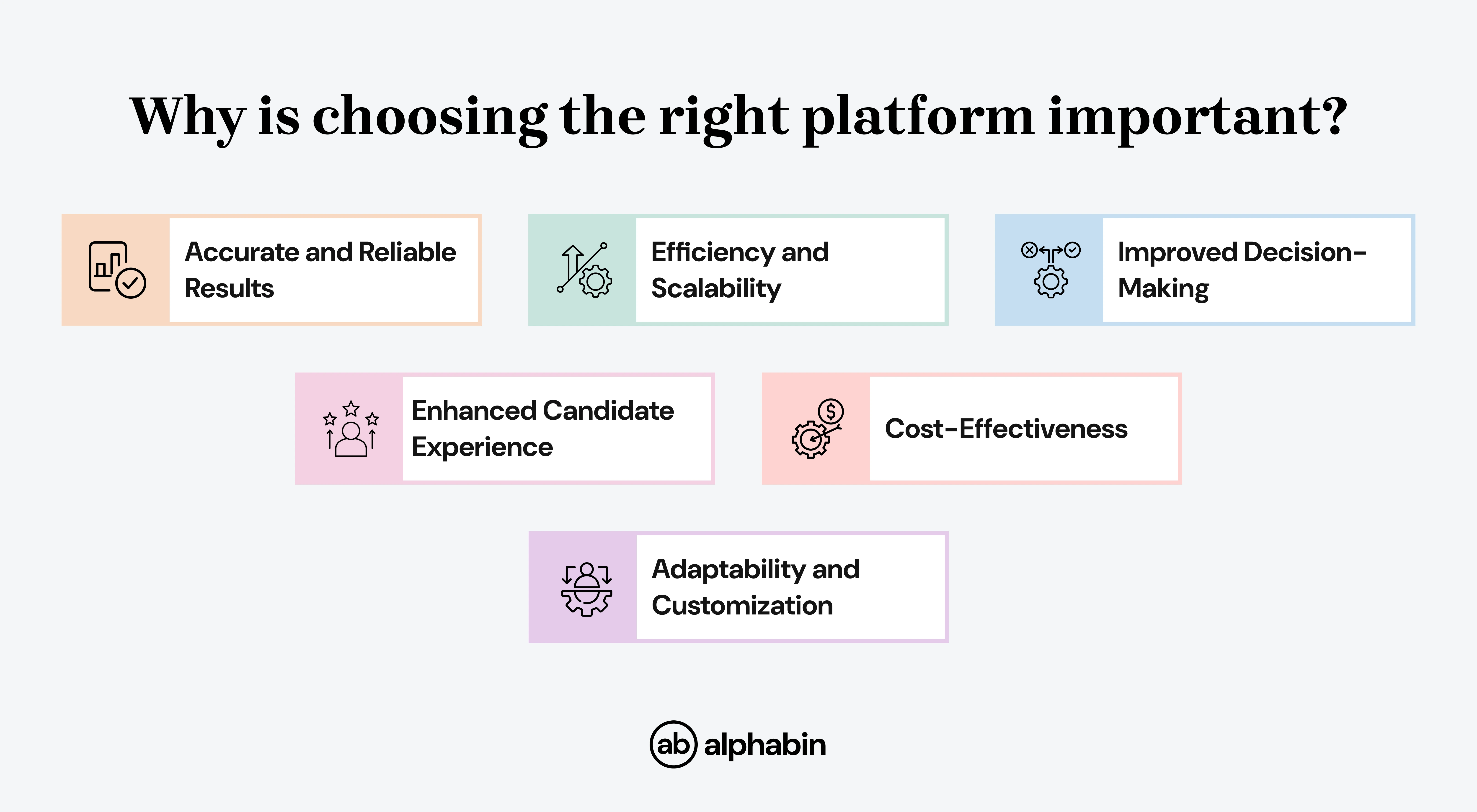

Importance of choosing the right evaluation platform

Choosing the right Chatbot testing platform is crucial for getting accurate, reliable, and meaningful results, which affect everything from hiring decisions to successful project implementation.

The right platform can increase efficiency, yield better data for decision-making, and ultimately deliver better outcomes using the latest AI Chatbot testing tools in 2025.

{{blog-cta-1}}

Best Chatbot Evaluation Platforms in 2025

In 2025, Chatbot evaluation platforms range from open-source frameworks to enterprise solutions.

Modern AI Chatbot testing tools in 2025 need to accommodate complex scenarios, including multi-modal interactions, contextual conversation understanding, and real-time performance metrics.

Top platform overviews and features

1. DeepEval: Comprehensive LLM Evaluation Framework

DeepEval is an open-source LLM evaluation framework designed for testing large language model outputs. Similar to Pytest, it offers unit testing for LLMs with research-backed evaluation metrics.

Key Features:

- G-Eval-based assessment methodology

- Pytest-style testing for LLM outputs

- Conversation flow validation
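To illustrate what "Pytest-style testing for LLM outputs" looks like in general, here is a minimal sketch using plain Python test functions. It does not call DeepEval, and it swaps any research-backed metric for a simple phrase check. The `fake_support_bot` function, its canned answers, and the 30-day policy are hypothetical stand-ins for a real Chatbot.

```python
from __future__ import annotations


def fake_support_bot(question: str) -> str:
    """Stand-in for a real Chatbot call (hypothetical; swap in your own client)."""
    canned = {
        "What is your return policy?": "You can return items within 30 days with a receipt.",
        "Do you ship internationally?": "Yes, we ship to most countries.",
    }
    return canned.get(question, "Sorry, I am not sure about that.")


def contains_all(answer: str, required_phrases: list[str]) -> bool:
    """Case-insensitive check that every required phrase appears in the answer."""
    lowered = answer.lower()
    return all(phrase.lower() in lowered for phrase in required_phrases)


def test_return_policy_mentions_window_and_receipt():
    answer = fake_support_bot("What is your return policy?")
    assert contains_all(answer, ["30 days", "receipt"])


def test_unknown_question_falls_back_gracefully():
    answer = fake_support_bot("What is the meaning of life?")
    assert "not sure" in answer.lower()
```

Saved as a `test_*.py` file, these functions are collected and run by `pytest` like any other unit test. A dedicated framework would replace `contains_all` with a scoring metric and a pass threshold.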

2. ChatGPT: Versatile AI Conversational Platform

ChatGPT has established its position by excelling in reasoning, a full range of natural language capabilities, and versatility.

It has a variety of use cases (content generation, customer support, etc.), and it is often treated as the default benchmark for conversational AI quality.

Key Features:

- Context-aware conversational abilities

- Multi-turn dialogue support

- Plugin and API integrations

3. Microsoft Copilot: AI Assistant for Productivity

Microsoft Copilot is baked into Microsoft 365, allowing users to be more productive by using AI to help with tasks like writing, summarizing, and workflow automation.

Key Features:

- Deep integration with Microsoft apps

- AI-powered content and workflow generation

- Real-time collaboration features

4. Botpress: AI Agent Development Platform

Botpress provides powerful tools for creating AI-driven agents with LLM-powered automation. It's ideal for building complex, highly customizable Chatbot workflows and includes built-in evaluation capabilities.

Key Features:

- Open-core platform with plugin ecosystem

- Multi-channel deployment

- LLM automation for advanced dialogue

5. Rasa: Open-Source Conversational AI Framework

Rasa gives developers full control over Chatbot behavior and architecture, suits enterprise-level conversational AI solutions, and includes a built-in testing and evaluation suite.

Key Features:

- On-premise or cloud deployment options

- Advanced NLP and intent recognition

- Full customization with Python-based components

6. Perplexity: AI-Powered Knowledge Assistant

Perplexity combines AI-based search with natural conversation to give users accurate, up-to-date information quickly in a conversational style. Its accuracy standards influence information-based Chatbot evaluation metrics.

Key Features:

- Real-time information retrieval

- Source citations for transparency

- Web and app-based accessibility

7. Grok.ai: Contextual AI for Social Platforms

Grok.ai, developed by xAI, is designed to provide witty, engaging, and context-aware conversations. It integrates with social platforms like X (formerly Twitter) for interactive experiences and sets standards for social media Chatbot evaluation.

Key Features:

- Social media-native Chatbot design

- Real-time trending topic adaptation

- Humor-infused conversational style

Comparative summary table

Key Criteria for Evaluating Chatbot Platforms

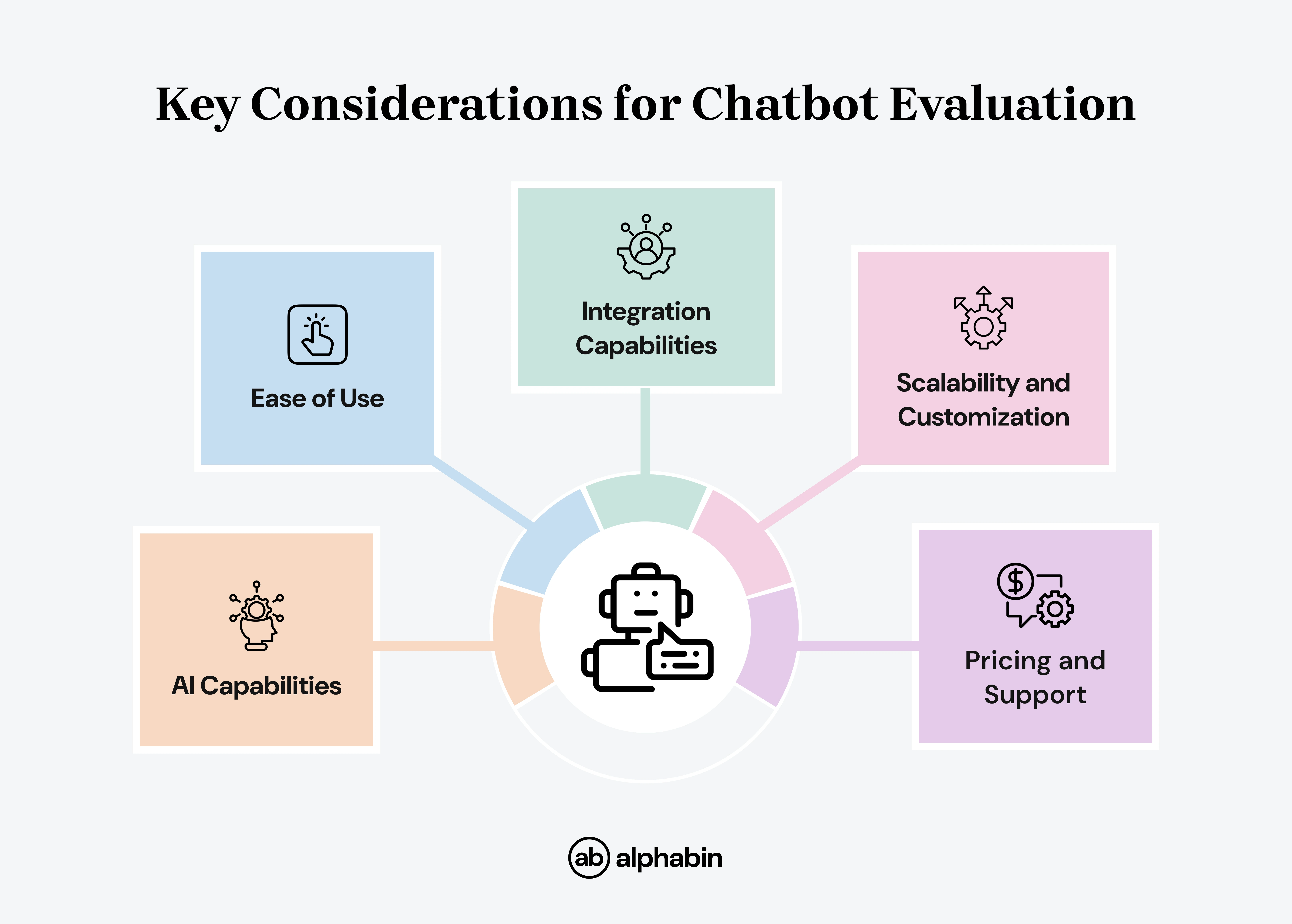

When selecting a Chatbot testing platform, you'll need to settle on evaluation criteria that reflect how your Chatbot must perform.

Today's conversational AI evaluation criteria go beyond simple response accuracy to include measures of conversation quality, user experience, and production quality assurance, all now standard in leading Chatbot evaluation platforms.

How do these platforms help in Chatbot evaluation?

- Accuracy and Context Testing: These check whether Chatbot responses are factually correct and relevant to the conversation, simulating different conversation flows to find logical gaps or misinterpretations.

- End-to-End Performance Checks: They test the whole Chatbot experience, from user input to final response, across all channels and devices.

- Intent and Dialogue Validation: By checking how the Chatbot interprets user intent, these platforms verify that intents are mapped accurately and that different conversation scenarios are handled correctly.

- Real-Time Monitoring and Feedback: Many provide live analytics on response times, conversation drop-offs, and user satisfaction scores to spot weaknesses.

- Conversation Flow Optimisation: They visualise and test different dialogue paths to find dead ends, loops, or missed responses that would frustrate users.

- Multi-Language and Accessibility: These test the Chatbot's accuracy and usability for diverse audiences across languages and regions.

- Knowledge Base and Factual Consistency: They check responses against trusted sources and verify that answers stay consistent, without contradictions, across multiple conversations.

- Context Retention and Personality Consistency: They test the Chatbot's ability to remember previous conversations, keep a consistent tone, and respond in line with its personality.
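The dead-end and loop checks mentioned under conversation flow can be sketched as a small graph analysis. This is an illustrative approach under stated assumptions, not how any particular platform implements it. The `FLOW` map, its state names, and the `TERMINAL` set are hypothetical.

```python
from __future__ import annotations

# Hypothetical dialogue flow: each state maps to the states it can lead to.
FLOW: dict[str, list[str]] = {
    "greeting": ["ask_order_id", "faq"],
    "ask_order_id": ["lookup_order"],
    "lookup_order": ["order_found", "ask_order_id"],  # retry loop that has an exit
    "order_found": ["goodbye", "refund_info"],
    "refund_info": [],            # dead end: the conversation cannot finish
    "faq": ["faq_answer", "clarify"],
    "faq_answer": ["goodbye"],
    "clarify": ["rephrase"],
    "rephrase": ["clarify"],      # loop with no way out
    "goodbye": [],                # intended end of the conversation
    "promo": ["goodbye"],         # never linked from any other state
}
TERMINAL = {"goodbye"}


def reachable(flow: dict[str, list[str]], start: str) -> set[str]:
    """All states reachable from `start` (including `start`), via depth-first search."""
    seen: set[str] = set()
    stack = [start]
    while stack:
        state = stack.pop()
        if state not in seen:
            seen.add(state)
            stack.extend(flow.get(state, []))
    return seen


def unreachable_states(flow: dict[str, list[str]], start: str) -> list[str]:
    """States that no conversation starting at `start` can ever enter."""
    return sorted(set(flow) - reachable(flow, start))


def stuck_states(flow: dict[str, list[str]], start: str, terminal: set[str]) -> list[str]:
    """Reachable states from which no terminal state can be reached."""
    return sorted(
        state for state in reachable(flow, start)
        if not (reachable(flow, state) & terminal)
    )


print("Unreachable:", unreachable_states(FLOW, "greeting"))
print("Stuck:", stuck_states(FLOW, "greeting", TERMINAL))
```

Treating "stuck" as "cannot reach a terminal state" catches both plain dead ends and inescapable loops, while leaving harmless retry loops alone.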

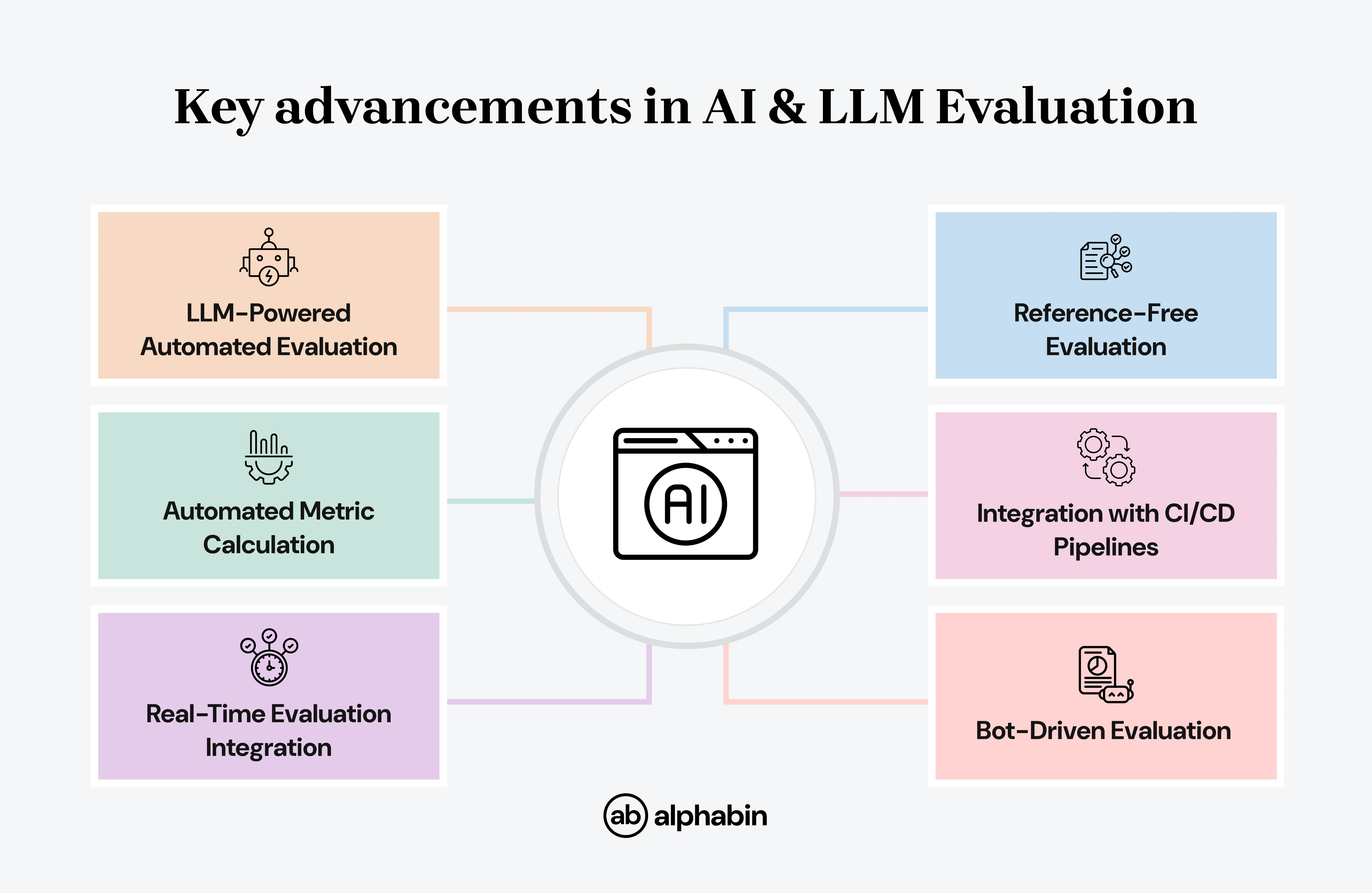

Future Trends in Chatbot Evaluation

The evolution of LLM evaluation frameworks in 2025 reflects the rapid pace of change in conversational AI technology.

As Chatbots keep evolving, evaluation frameworks must evolve with them to handle greater complexity, multi-modality, and expectations of real-time performance.

Understanding where evaluation is heading helps organizations prepare for the next generation of Chatbot testing and keep their evaluation approaches current as the technology changes.

Advances in AI & LLM evaluation automation

Large Language Models (LLMs) and artificial intelligence (AI) developments have led to significant advances in the automated testing and evaluation of Chatbots.

Traditional rule-based and keyword-matching evaluations are being displaced by more sophisticated, nuanced assessments of Chatbot behavior.

Choosing the Right Platform for Your Needs

To navigate the wide variety of Chatbot evaluation platforms effectively, take a systematic approach based on your organisation's functional needs, technical constraints, and growth goals.

Key factors to consider include:

- Defining your needs and requirements

- Evaluating platform capabilities

- Assessing your team's technical skills

- Evaluating the vendor's technical support

- Budgeting and pricing
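One way to apply the factors above systematically is a weighted scoring matrix. The sketch below is only an illustration: the weights, the 1-5 ratings, and the names "Platform A" and "Platform B" are made-up placeholders, not assessments of any real product.

```python
from __future__ import annotations

# Hypothetical weights for the five factors listed above; they should sum to 1.0.
WEIGHTS = {
    "needs_fit": 0.30,
    "platform_capabilities": 0.25,
    "technical_skills": 0.15,
    "technical_support": 0.10,
    "pricing": 0.20,
}

# Placeholder ratings (1 = poor, 5 = excellent) for two made-up candidates.
RATINGS = {
    "Platform A": {
        "needs_fit": 4, "platform_capabilities": 5, "technical_skills": 2,
        "technical_support": 3, "pricing": 3,
    },
    "Platform B": {
        "needs_fit": 3, "platform_capabilities": 3, "technical_skills": 5,
        "technical_support": 4, "pricing": 5,
    },
}


def weighted_score(ratings: dict[str, int], weights: dict[str, float]) -> float:
    """Sum of rating * weight over every factor."""
    return sum(weights[factor] * ratings[factor] for factor in weights)


# Guard against weights that do not add up to 100%.
assert abs(sum(WEIGHTS.values()) - 1.0) < 1e-9

ranked = sorted(
    RATINGS,
    key=lambda name: weighted_score(RATINGS[name], WEIGHTS),
    reverse=True,
)
for name in ranked:
    print(f"{name}: {weighted_score(RATINGS[name], WEIGHTS):.2f}")
```

With these placeholder numbers, the candidate with weaker capabilities still ranks first because it fits the team's skills and budget better, which is the kind of trade-off the weights are meant to surface.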

{{cta-image-third}}

Common mistakes to avoid

{{blog-cta-2}}

Conclusion

Finding the right Chatbot evaluation platform in 2025 means striking the right balance between technical capabilities, your team’s expertise, and your long-term growth goals. As Chatbots evolve into more complex, multi-turn conversational systems, the evaluation tools you choose must also advance.

With Alphabin, you can automate test generation, ensure higher accuracy, and keep pace with user expectations.

Remember, Chatbot evaluation is not a one-off task; it's an ongoing process that should scale with your product and your audience.

Get in touch with Alphabin today to future-proof your Chatbot testing.

FAQs

1. What metrics matter most in Chatbot evaluation?

Track accuracy, task completion, response time, sentiment, and fallback rates.

2. How do I test multi-turn conversations?

Check if the bot maintains context, coherence, and relevance across multiple exchanges.

3. When should I evaluate my Chatbot?

During design, pre-deployment, and continuously in production, for the best results.

4. Should I use automation or human feedback?

Use automation for speed and scale, and human review for tone, nuance, and complex cases.
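The multi-turn check from FAQ 2 can be sketched as a scripted dialogue in which a later turn depends on information given earlier. `RememberNameBot` is a toy stand-in for a real Chatbot, and the script and expected phrases are hypothetical.

```python
from __future__ import annotations


class RememberNameBot:
    """Toy bot that stores the user's name (hypothetical stand-in for a real bot)."""

    def __init__(self) -> None:
        self.memory: dict[str, str] = {}

    def reply(self, message: str) -> str:
        text = message.strip()
        lowered = text.lower()
        if lowered.startswith("my name is "):
            self.memory["name"] = text[len("my name is "):].rstrip(".")
            return f"Nice to meet you, {self.memory['name']}."
        if "my name" in lowered:
            name = self.memory.get("name")
            return f"Your name is {name}." if name else "I don't know your name yet."
        return "How can I help?"


def run_dialogue(bot, turns: list[tuple[str, str]]) -> list[tuple[int, str, str]]:
    """Play each (user message, expected phrase) turn; return the turns that failed."""
    failures = []
    for index, (message, expected) in enumerate(turns, start=1):
        reply = bot.reply(message)
        if expected.lower() not in reply.lower():
            failures.append((index, message, reply))
    return failures


SCRIPT = [
    ("My name is Priya.", "Priya"),
    ("I need help with an order", "help"),
    ("What is my name?", "Priya"),  # passes only if context from turn 1 is retained
]

print("Failed turns:", run_dialogue(RememberNameBot(), SCRIPT))
```

An empty failure list means the bot kept context across the exchange. Running the last turn against a fresh bot fails, which shows that the check really depends on earlier turns.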
