I recently connected with a data engineering manager leading engineering efforts at an energy analytics company. His team processes complex data from hundreds of public sources, each with its own format, errors, and quirks. During our conversation about data quality challenges, he shared an insight that resonated deeply:

"If I don't believe in the data that I'm producing as a data engineer, how should my customers believe in the data?"

This principle applies directly to QA. Every day, we generate test results that teams rely on for critical decisions. But without data-driven test reporting, those results get buried in noise: flaky tests, false positives, and unclear reports that make the results impossible to trust. Modern quality assurance analytics can change this entirely.

The harsh reality is that companies lose an average of 20% of their software development time dealing with unreliable tests. That's one day every week spent chasing ghosts instead of shipping features.

{{blog-cta-1}}

Why QA Waste is Killing Your Cycle Time

1. The Hidden Cost of False Alarms

Think about your last sprint. How many hours did your team spend investigating test failures that turned out to be nothing?

Google's internal study revealed that flaky tests caused 4.56% of all test failures, consuming over 2% of developer coding time. For a 50-developer team, that's equivalent to losing an entire full-time engineer, just to chase phantoms.

A GitLab survey found that 36% of teams experience monthly release delays due to test failures. Each delay can cost thousands. One estimate puts it at $27,000 per day for a product generating $10 million annually.
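The two figures above come down to simple arithmetic. Here is a rough sketch, assuming revenue accrues evenly across a 365-day year and that lost time scales linearly with team size (both are simplifications, and the function names are illustrative):

```python
def daily_revenue_at_risk(annual_revenue: float, days_per_year: int = 365) -> float:
    """Revenue attributed to one day, assuming it accrues evenly across the year."""
    return annual_revenue / days_per_year


def engineers_lost(team_size: int, fraction_of_time_lost: float) -> float:
    """Full-time-engineer equivalents consumed by a fractional time loss."""
    return team_size * fraction_of_time_lost


# A product generating $10 million annually, delayed by one day.
print(round(daily_revenue_at_risk(10_000_000)))  # 27397

# 50 developers each losing 2% of their coding time (roughly one engineer).
print(engineers_lost(50, 0.02))
```

Plug in your own revenue and team size to see what a single delayed release or a flaky suite costs you.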

2. The Trust Breakdown

When tests fail randomly, teams develop workarounds. They create a "retry culture," rerunning failing tests instead of fixing issues. I've seen teams where the standard practice became "run it three times; if it passes once, ship it."

Even a 0.5% per-test failure rate compounds quickly. In a suite of roughly 300 tests, the chance that every test passes in a given run drops below 25%, assuming the failures are independent. It's like trying to navigate with a compass that works only one time in four! Eventually, you stop trusting it entirely.

3. Manual Reporting Marathon

One QA firm discovered that their client was spending 8 hours per week just compiling test reports. That's 400+ hours annually spent on data entry instead of improving quality. Time that could eliminate technical debt or expand test coverage gets consumed by spreadsheet maintenance.

That's exactly why we built Testdino.

Testdino automatically pulls your test data, categorizes failures with AI, and generates role-specific dashboards in real-time. No more spreadsheets. No more manual compilation. Just instant insights that help you ship better software.

Your QA team can finally do QA, not data entry. Schedule a call to learn more.

{{cta-image}}

Cycle Time = Your Key Metric

The data engineering manager I spoke with was crystal clear about priorities:

"Cycle time is the North Star metric, and it basically tells us where to make those trade-offs."

Poor data quality cascades into waste, lost confidence, rework, and delays. The same applies to QA. When test results are unreliable, the ripple effects consume resources that could have been invested in prevention.

1. Speed Over Volume

Here's what challenged my thinking: "I would rather have fewer tests that run quickly than more tests that run slowly."

This flies in the face of conventional QA wisdom, but the logic is sound. Fast tests integrate into developer workflows. A 10-minute test suite runs on every commit. A 2-hour suite? It becomes a nightly job that developers learn to ignore.

Test automation without proper data analytics in testing is like driving fast with your eyes closed. You need data-driven test reporting to make sense of the speed. That's where platforms like Testdino excel, by turning raw test execution data into actionable intelligence that improves QA efficiency.

2. Measuring What Matters

Cycle time metrics reveal the truth:

- How quickly do we detect real issues?

- What's the feedback loop for developers?

- How fast can we go from detection to resolution?

When you optimize for cycle time, quality follows. Teams catch bugs when they're cheap to fix, developers stay in flow, and releases become predictable.
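The questions above can be turned into numbers once each issue carries three timestamps. This is a minimal sketch, assuming a hypothetical `Issue` record with `committed_at`, `detected_at`, and `resolved_at` fields; your tracker will likely name these differently:

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median


@dataclass
class Issue:
    committed_at: datetime  # when the offending change landed
    detected_at: datetime   # when a test first caught it
    resolved_at: datetime   # when the fix was merged


def median_hours(deltas: list[timedelta]) -> float:
    """Median of a list of durations, expressed in hours."""
    return median(d.total_seconds() / 3600 for d in deltas)


def cycle_time_summary(issues: list[Issue]) -> dict[str, float]:
    """Median time to detect and time to resolve across a set of issues."""
    return {
        "time_to_detect_h": median_hours([i.detected_at - i.committed_at for i in issues]),
        "time_to_resolve_h": median_hours([i.resolved_at - i.detected_at for i in issues]),
    }
```

The median is used here instead of the mean so that one long-running incident does not hide the typical feedback loop.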

3 Reasons Tests Fail

Understanding why tests fail is crucial for eliminating waste. After analyzing patterns across thousands of test runs, three categories emerged:

1. UI Changes: The Moving Target

UI modifications are the leading cause of test failures. Even minor adjustments like a button moving 10 pixels, a color change, or a renamed element can trigger cascading failures. These aren't bugs; they're maintenance overhead that consumes valuable QA time.

2. Real Bugs: The Actual Problems

Genuine defects cluster around new code changes. If multiple tests fail in the same module after a deployment, you've likely found a real issue. Automated testing platforms with intelligent test reporting can instantly identify these patterns.

That's why Alphabin's two-tool approach works so well. TestGenX rapidly creates comprehensive test suites, while Testdino tracks their performance over time, helping distinguish real bugs from noise through data analytics in testing.

3. Flaky Tests: The Time Thieves

Tests that randomly pass or fail without code changes represent pure waste. Network timeouts, race conditions, and external dependencies create unreliable tests that erode trust and consume debugging time while providing zero value.
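One simple way to surface flaky tests is to look for a test that both passed and failed against the same commit. This is a minimal sketch, assuming run history is available as hypothetical `(test_name, commit_sha, passed)` tuples:

```python
from collections import defaultdict


def find_flaky_tests(runs):
    """Return names of tests with both a pass and a fail on the same commit.

    `runs` is an iterable of (test_name, commit_sha, passed) tuples.
    """
    outcomes = defaultdict(set)
    for test_name, commit_sha, passed in runs:
        outcomes[(test_name, commit_sha)].add(bool(passed))
    # Two distinct outcomes for one (test, commit) pair means the code did not
    # change but the result did.
    return sorted({name for (name, _), seen in outcomes.items() if len(seen) == 2})


history = [
    ("login", "abc123", True),
    ("login", "abc123", False),     # same commit, different result: flaky
    ("checkout", "abc123", False),
    ("checkout", "abc123", False),  # consistently failing: not flaky
]
print(find_flaky_tests(history))  # ['login']
```

A test that fails consistently on one commit is deliberately left out, since that pattern points to a real bug or a broken environment rather than flakiness.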

The data engineering manager's framework for thinking about waste applies perfectly here. Each category requires a different response, yet traditional reports lump them all together.

Why Current Test Reports Don't Work

1. No Insights = No Action!

QA data is typically scattered across tools: CI logs in one system, test results in another, and defect tracking in a third. Without consolidation, teams lack the holistic view needed to spot patterns or make informed decisions.

This fragmentation means teams can't track progress effectively, identify trends, or spot recurring issues that should have been fixed in previous sprints.

2. Surface Metrics Without Context

Most reports show what happened, but not why. A chart displaying "50 failed tests" provides no insight into whether these are UI breaks from a recent redesign or critical production bugs.

As the data engineering manager noted when discussing data quality, bad data isn't always obviously wrong. The same principle applies to test reports: without context, even accurate data leads to poor decisions.

3. Yesterday's News

Static end-of-sprint reports arrive too late to be actionable. By the time leadership reviews them, the codebase has evolved, new features are in development, and the insights are stale. This is where data-driven test reporting transforms the game.

With Testdino's real-time dashboards, you get live quality assurance analytics that evolve with your codebase. No more archaeology, just actionable insights.

Development moves at internet speed, but traditional reporting operates on a print newspaper timeline.

{{blog-cta-2}}

Data-Driven Test Reporting: Game Changer

1. AI-Powered Failure Classification

Modern test reporting platforms like Testdino use machine learning to automatically categorize failures. When multiple builds fail with similar patterns, the system recognizes whether it's an environment issue, a code defect, or a flaky test, eliminating hours of manual investigation.

This intelligent classification builds the confidence that the data engineering manager emphasized. When your reporting distinguishes between false alarms and real bugs, teams trust the results.
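To make the idea of failure classification concrete, here is a deliberately simple rule-based sketch. It is not how Testdino or any ML-based platform works internally. The `Failure` record, the keyword lists, and the category names are all assumptions chosen for illustration:

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Failure:
    test_name: str
    error_message: str
    passed_on_retry: bool


# Hypothetical keyword hints; a real system would learn these from history.
ENVIRONMENT_HINTS = ("connection refused", "timed out", "name resolution")
UI_HINTS = ("element not found", "no such element", "selector")


def classify(failure: Failure) -> str:
    """Assign a failure to one coarse category using keyword rules."""
    message = failure.error_message.lower()
    if failure.passed_on_retry:
        return "flaky"
    if any(hint in message for hint in ENVIRONMENT_HINTS):
        return "environment"
    if any(hint in message for hint in UI_HINTS):
        return "ui_change"
    return "code_defect"


def summarize(failures: list[Failure]) -> Counter:
    """Count failures per category so a report can show why tests failed."""
    return Counter(classify(f) for f in failures)
```

Even rules this crude turn "50 failed tests" into a breakdown a team can act on. A learned classifier replaces the keyword lists with patterns drawn from past runs.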

2. Predictive Intelligence

By analyzing historical patterns, AI identifies high-risk areas before they become critical. Modules with high code churn, recent architectural changes, or historical defect clusters get flagged for additional testing focus.
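A risk score along these lines can be sketched in a few lines. The `ModuleStats` fields and the 0.5 / 0.4 / 0.1 weights below are assumptions for illustration, not values from any particular tool; a production system would tune or learn them:

```python
from dataclasses import dataclass


@dataclass
class ModuleStats:
    name: str
    lines_changed: int                # recent code churn
    past_defects: int                 # historical defect count
    recent_architecture_change: bool


def rank_by_risk(modules: list[ModuleStats]) -> list[ModuleStats]:
    """Order modules from highest to lowest heuristic risk."""
    # Normalize churn and defects against the largest value in the set.
    max_churn = max((m.lines_changed for m in modules), default=0) or 1
    max_defects = max((m.past_defects for m in modules), default=0) or 1

    def score(m: ModuleStats) -> float:
        return (
            0.5 * m.lines_changed / max_churn
            + 0.4 * m.past_defects / max_defects
            + 0.1 * (1.0 if m.recent_architecture_change else 0.0)
        )

    return sorted(modules, key=score, reverse=True)
```

The modules at the top of the list are the ones worth extra testing focus before the next release.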

This shifts QA from reactive to proactive, preventing fires instead of fighting them. When test automation meets data analytics in testing, you get predictive power that traditional test reporting can't match.

We at Alphabin combine both for complete QA efficiency.

3. Real-Time Visibility

Live dashboards provide immediate insights, not weekly summaries. Teams see trends forming, catch failure spikes as they happen, and make decisions based on current data.
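Catching a failure spike as it happens can be as simple as comparing the latest failure rate with a recent baseline. This is a minimal sketch, assuming a list of recent per-run failure rates; the three-sigma threshold and the `min_spread` floor are illustrative defaults, not recommendations:

```python
from statistics import mean, pstdev


def is_failure_spike(history: list[float], latest: float,
                     min_sigma: float = 3.0, min_spread: float = 0.01) -> bool:
    """Flag `latest` if it sits well above the recent baseline failure rate.

    `history` must contain at least one earlier failure rate (0.0 to 1.0).
    """
    baseline = mean(history)
    # Floor the spread so a perfectly stable history does not make
    # every tiny change look like a spike.
    spread = max(pstdev(history), min_spread)
    return latest > baseline + min_sigma * spread


recent_rates = [0.02, 0.03, 0.02, 0.03]
print(is_failure_spike(recent_rates, 0.20))  # True
print(is_failure_spike(recent_rates, 0.03))  # False
```

Run on every build, a check like this raises the alarm while the offending change is still fresh in the developer's mind.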

Our proprietary tool Testdino provides dashboards that translate technical details into business language. Instead of raw percentages, executives see risk assessments with clear factors and recommended actions.

How Testers Win with Data-Driven Reports

1. Instant Root Cause Analysis

Click on a failed test and understand why immediately. The system shows whether it matches known issues, displays historical patterns, and suggests likely causes. Teams report saving 8+ hours weekly on investigation and reporting, time redirected to quality improvements.

This is exactly what modern data-driven test reporting delivers. Instead of manually digging through logs, quality assurance analytics automatically surfaces the insights you need. Testdino takes this further by learning from your test patterns over time, making each diagnosis smarter than the last!

2. Proactive Maintenance Alerts

Smart reporting identifies problems before they cascade. When UI changes break multiple tests, the system recommends specific updates. When tests become increasingly flaky, it suggests stabilization strategies.

3. Continuous Learning

Every test execution adds to the knowledge base. Patterns emerge: which tests are problematic, which modules need attention, and which fixes improve reliability. This creates a feedback loop for continuous improvement.

The lean philosophy of eliminating waste through data-driven decisions transforms QA from a cost center into a value driver!

{{cta-image-second}}

How Organizations Benefit: Real-Time Visibility

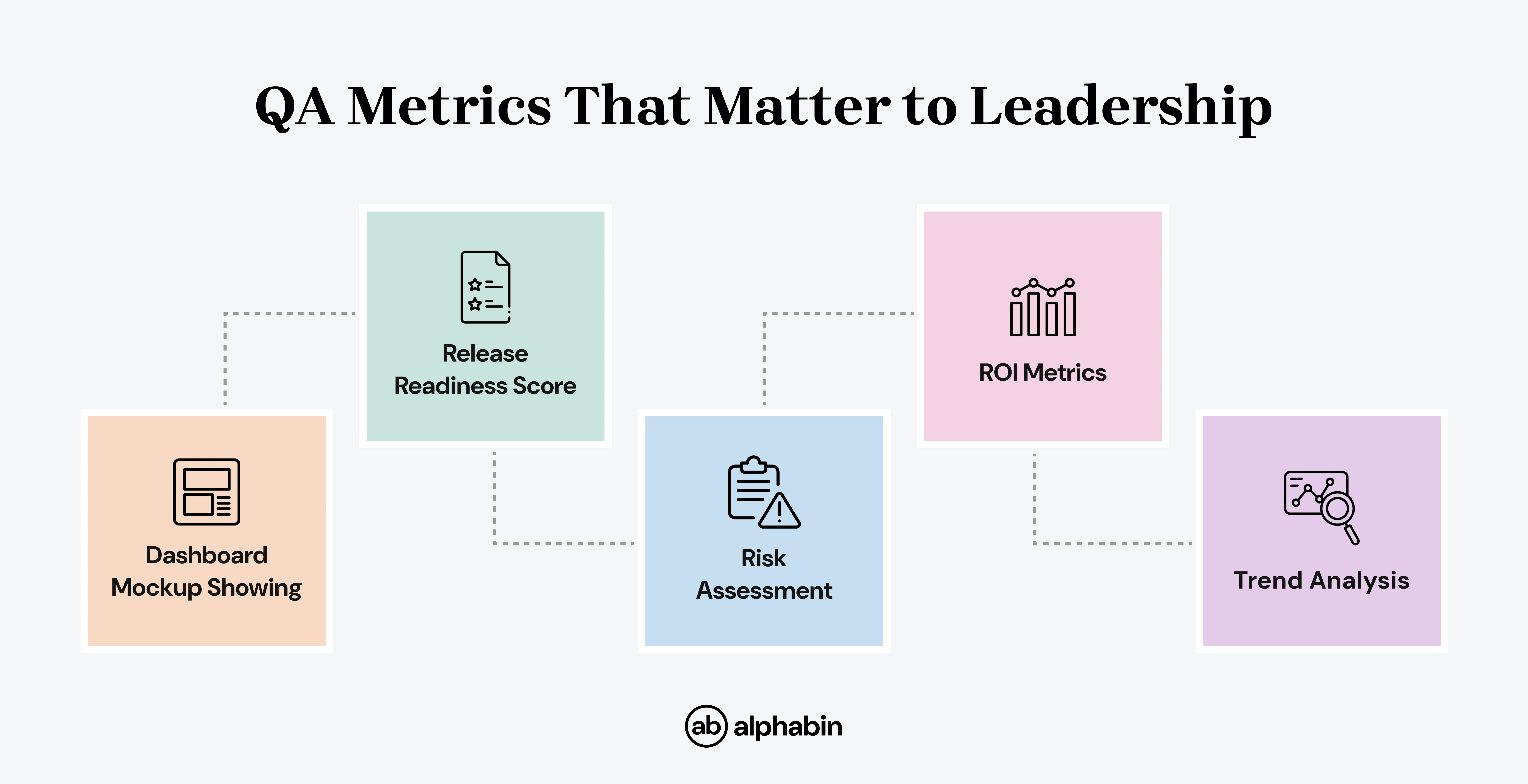

1. Executive-Ready Dashboards

Leadership needs business metrics, not technical logs. Data-driven test reporting bridges this gap by transforming automated testing results into executive-ready insights.

When data analytics in testing is done right, you see risk assessments, not test counts.

Product managers can instantly assess whether features are ready to ship and understand specific risks.

2. Strategic Resource Allocation

From a lean perspective, business value comes from eliminating waste in the system.

Clear data enables smart decisions:

- Don't waste developer time on flaky test investigations

- Focus efforts on modules with increasing real failures

- Invest in automation where it provides maximum ROI

3. Building Organizational Confidence

When Mozilla improved their test reliability and reporting transparency, developer confidence jumped 29%. This translates directly to faster development cycles and fewer production issues.

Trustworthy QA data creates a culture of confidence where teams ship faster because they trust their quality gates.

Real Success Stories

1. Enterprise Scale Impact

Major analytics platforms now serve thousands of companies, including Fortune 500 firms, processing millions of test executions annually. These organizations report dramatic reductions in analysis time through unified ML-powered insights.

2. Transformation in Action

A U.S. recruiting platform's implementation of AI-driven testing and reporting tools delivered:

- 80% test coverage achieved in 3 months

- 8 hours per week saved on reporting tasks

- 60% reduction in debugging time through flaky test identification

3. The Financial Impact

Industry analyses show that eliminating just 2% of developer time lost to test issues can save over $120,000 annually. Single-day release delays can cost $27,000 in lost revenue for mid-sized products.

These aren't marginal improvements. They're transformative changes affecting entire development organizations.

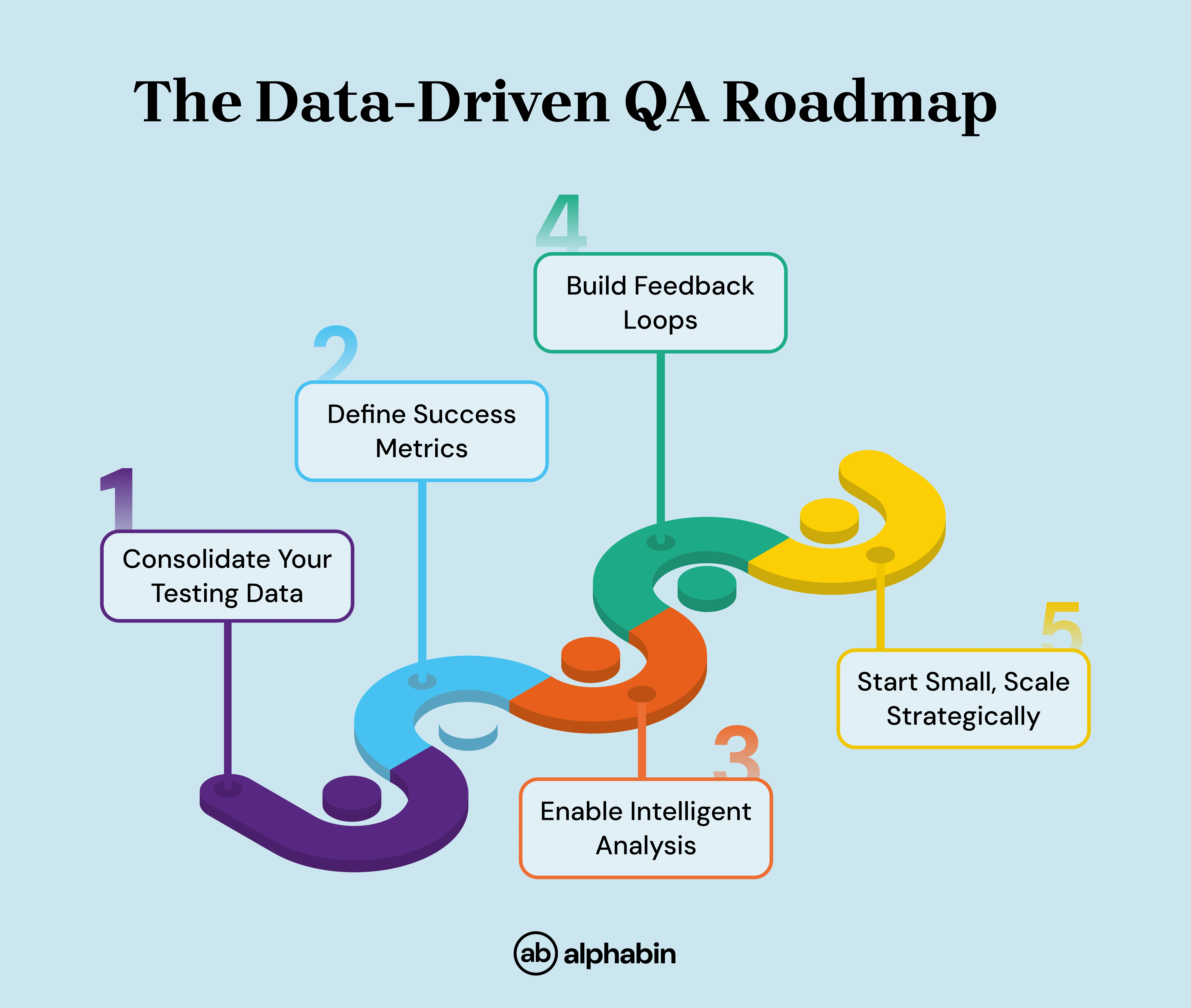

Let's Get Practical: Start Using Data-Driven Reports

Your Implementation Roadmap

{{blog-cta-3}}

Conclusion: Your QA Transformation Starts Here

The data engineering manager I spoke with spent a year teaching his teams these lean principles. The payoff? Teams operating with "freedom and autonomy" are delivering value faster than ever before.

Your QA process can achieve the same transformation. When you stop accepting waste as inevitable and start treating cycle time as sacred, everything changes.

At Alphabin, we've built this philosophy into our DNA. Our proprietary tool, TestGenX, creates test automation instantly, while Testdino provides the AI-driven reporting and analytics that make data-driven QA a reality.

We don't just provide tools; we partner with you to eliminate QA waste and accelerate your development cycle.

Ready to transform your QA from a bottleneck into a competitive advantage?

Let's talk about how Alphabin's data-driven approach can revolutionize your testing process. Because in today's market, the companies that ship quality software fastest win!

We're here to make sure that's you.

Optimize Your QA Process with Alphabin - Schedule a Demo Today!

FAQs

1. How does data-driven test reporting make QA easier?

Data-driven reporting uses AI to analyze test results, separating real issues from noise like UI changes and flaky tests. This lets teams quickly identify, prioritize, and address problems without sifting through overwhelming data.

2. Why do test reports often show so many failures?

Test reports include failures from different sources, such as new bugs, UI updates, or unstable tests. Advanced reporting tools help clarify which failures matter most, reducing wasted time on non-critical issues.

3. How can I reduce the time spent on manual QA reporting?

Automated analytics tools sort failures, spot patterns, and generate reports in real time. This automation frees up your team to focus on test improvements and bug fixes, rather than collecting and formatting data by hand.

4. How does Alphabin help improve quality assurance?

Alphabin’s AI-driven tools deliver real-time dashboards and tailored analytics, helping teams detect issues earlier and allocate resources more effectively, leading to more predictable and high-quality release cycles.
